Environment

System: CentOS 7

tomcat: Apache tomcat/8.5.32

Nginx proxy: 192.168.10.100 node0.sundayle.com

tomcat node1: 192.168.10.101 node1.sundayle.com

tomcat node2: 192.168.10.102 node2.sundayle.com

Tomcat installation and configuration

Installing Java

https://www.sundayle.com/2018/01/30/java/

ln -sv /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.171-8.b10.el7_5.x86_64 /usr/lib/jvm/latest

Java environment

cat > /etc/profile.d/java.sh <<EOF

export JAVA_HOME=/usr/lib/jvm/latest

export JRE_HOME=\$JAVA_HOME/jre

export PATH=\$PATH:\$JRE_HOME/bin

export CLASSPATH=\$JRE_HOME/lib

EOF

source /etc/profile.d/java.sh

Installing Tomcat

wget http://mirrors.shu.edu.cn/apache/tomcat/tomcat-8/v8.5.32/bin/apache-tomcat-8.5.32.tar.gz

mkdir -p /usr/local/tomcat

tar xf apache-tomcat-8.5.32.tar.gz -C /usr/local/tomcat --strip-components=1

chown -R tomcat:tomcat /usr/local/tomcat

Starting Tomcat

Startup script

Or systemd

[Unit]

Description=Apache tomcat 8

After=syslog.target network.target

[Service]

Type=forking

User=tomcat

Group=tomcat

Environment=JAVA_HOME=/usr/lib/jvm/latest

Environment=CATALINA_PID=/usr/local/tomcat/temp/tomcat.pid

Environment=CATALINA_HOME=/usr/local/tomcat

Environment=CATALINA_BASE=/usr/local/tomcat

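# Alternative heap settings. systemd keeps only the last assignment of a variable, so this line is disabled in favour of the one below.

#Environment='CATALINA_OPTS=-Xms4096M -Xmx4096M -server -XX:+UseParallelGC'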

Environment='CATALINA_OPTS=-Dfile.encoding=UTF-8 -server -Xms2048m -Xmx2048m -Xmn1024m -XX:SurvivorRatio=10 -XX:MaxTenuringThreshold=15 -XX:NewRatio=2 -XX:+DisableExplicitGC'

Environment='JAVA_OPTS=-Djava.awt.headless=true -Djava.security.egd=file:/dev/./urandom'

ExecStart=/usr/local/tomcat/bin/startup.sh

ExecStop=/bin/kill -15 $MAINPID

Restart=on-failure

[Install]

WantedBy=multi-user.target

systemctl enable tomcat

shutdown.sh cannot stop Tomcat

vim /usr/local/tomcat/bin/shutdown.sh

Default (last line):

Change it to:
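One common remedy, sketched here under the assumption that the stock shutdown.sh ends with exec "$PRGDIR"/"$EXECUTABLE" stop "$@", is to pass -force so that catalina.sh kills the JVM recorded in CATALINA_PID if it does not exit on its own. The helper name patch_shutdown is hypothetical. -force only works when CATALINA_PID is set in the environment that runs shutdown.sh (the systemd unit above sets it to /usr/local/tomcat/temp/tomcat.pid).

```shell
# Hypothetical helper: add -force to the "stop" call in a shutdown.sh-style script.
# Assumes the script contains:  exec "$PRGDIR"/"$EXECUTABLE" stop "$@"
patch_shutdown() {
    # $1: path to the script to patch (edited in place, GNU sed)
    sed -i 's#"\$EXECUTABLE" stop "\$@"#"$EXECUTABLE" stop -force "$@"#' "$1"
}
```

Usage would be: patch_shutdown /usr/local/tomcat/bin/shutdown.sh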

Hiding the version number

# Unpack and edit the version info
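One way to do this is sketched below. It assumes the version strings live in org/apache/catalina/util/ServerInfo.properties inside lib/catalina.jar, as they do in stock Tomcat 8.5 builds. The helper name hide_server_info and the replacement values are hypothetical choices, not something the original steps prescribe.

```shell
# Hypothetical helper: replace the advertised version strings in an extracted
# ServerInfo.properties with generic values (edited in place, GNU sed).
hide_server_info() {
    # $1: path to org/apache/catalina/util/ServerInfo.properties
    sed -i -e 's#^server\.info=.*#server.info=Apache Tomcat#' \
           -e 's#^server\.number=.*#server.number=0.0.0.0#' "$1"
}

# Surrounding steps, assuming this article's install path (not executed here):
#   cd /tmp
#   jar xf /usr/local/tomcat/lib/catalina.jar org/apache/catalina/util/ServerInfo.properties
#   hide_server_info org/apache/catalina/util/ServerInfo.properties
#   jar uf /usr/local/tomcat/lib/catalina.jar org/apache/catalina/util/ServerInfo.properties
#   then restart Tomcat
```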

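Changing the default directory

<!-- Change the default directory /usr/local/webserver/tomcat/webapps to /data/webapps -->

root@web37:/data/webapps# ls -l

Manager user permissions

vim conf/tomcat-users.xml

Lifting the IP restriction behind manager / host-manager 403 errors

Tomcat 8.5 and later tighten the remote-access filter for these applications: remote login is not allowed by default, so the configuration files must be edited.

Files to edit: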

vim /usr/local/tomcat/webapps/manager/META-INF/context.xml

vim /usr/local/tomcat/webapps/host-manager/META-INF/context.xml

Default:

<Valve className="org.apache.catalina.valves.RemoteAddrValve"

allow="127\.\d+\.\d+\.\d+|::1|0:0:0:0:0:0:0:1" />

Change to:

<Valve className="org.apache.catalina.valves.RemoteAddrValve"

allow="192\.168\.10\.\d+|127\.\d+\.\d+\.\d+|::1|0:0:0:0:0:0:0:1" />

This allows access from 192.168.10.x and 127.x.x.x.
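Before restarting, the allow expression can be sanity-checked from the shell. The sketch below is an approximation: the valve uses Java regular expressions and requires the whole address to match, which is imitated here with grep -E -x after rewriting \d to [0-9]. The names ALLOW and ip_allowed are hypothetical.

```shell
# Pattern copied from the allow attribute above.
ALLOW='192\.168\.10\.\d+|127\.\d+\.\d+\.\d+|::1|0:0:0:0:0:0:0:1'

# Hypothetical helper: succeed if the address fully matches the allow pattern.
ip_allowed() {
    # grep -E has no \d, so rewrite it to [0-9]; -x demands a whole-line match.
    pattern=$(printf '%s' "$ALLOW" | sed 's/\\d/[0-9]/g')
    printf '%s\n' "$1" | grep -Eqx -- "$pattern"
}
```

For example, ip_allowed 192.168.10.55 succeeds while ip_allowed 192.168.11.5 fails.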

catalina.sh stop && catalina.sh start

Adding a virtual host

mkdir /data/tomcat/ROOT -pv

vim /data/tomcat/ROOT/index.jsp

<html>

<head><title>tomcatA</title></head>

<body>

<h1><font color="blue">tomcatA.sundayle.com</font></h1>

<table align="center" border="1">

<tr>

<td>Session ID</td>

<% session.setAttribute("sundayle.com","sundayle.com"); %>

<td><%= session.getId() %></td>

</tr>

<tr>

<td>Created on</td>

<td><%= session.getCreationTime() %></td>

</tr>

</table>

</body>

</html>

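With the default directory, every subdirectory other than ROOT (for example app) is reachable at http://server_name:8080/app.

To serve content from a different directory, either modify the existing Host name="localhost" entry or add a new one such as Host name="node1.sundayle.com".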

vim /usr/local/tomcat/conf/server.xml

<Engine name="Catalina" defaultHost="node1.sundayle.com">

<Host name="localhost" appBase="webapps"

unpackWARs="true" autoDeploy="true">

</Host>

<Host name="node1.sundayle.com" appBase="/data/tomcat" unpackWARs="true" autoDeploy="true">

<Context path="" docBase="/data/tomcat/ROOT" debug="0" reloadable="false"/>

<Valve className="org.apache.catalina.valves.AccessLogValve" directory="logs"

prefix="node1.sundayle.com_access_log" suffix=".txt"

pattern="%h %l %u %t &quot;%r&quot; %s %b" />

</Host>

Reverse proxy

Nginx as a reverse proxy for Tomcat

echo "192.168.10.101 node1.sundayle.com" >> /etc/hosts

echo "192.168.10.102 node2.sundayle.com" >> /etc/hosts

echo "192.168.10.100 www.sundayle.com" >> /etc/hosts

cat /etc/nginx/conf.d/tomcat_proxy.conf

upstream tomcats {

hash $request_uri consistent;

#ip_hash;

#hash $cookie_name consistent;

server node1.sundayle.com:8080;

server node2.sundayle.com:8080;

}

server {

listen 80;

server_name www.sundayle.com;

location / {

proxy_pass http://tomcats;

}

}

systemctl restart nginx

Httpd as a reverse proxy for Tomcat

Proxying a single Tomcat over HTTP

<VirtualHost *:80>

Proxying a single Tomcat over AJP

httpd -M | grep proxy_ajp_module # make sure proxy_ajp_module is loaded

cat /etc/httpd/conf.d/tomcat_ajp_proxy.conf

<VirtualHost *:80>

ServerName node0.sundayle.com

DocumentRoot "/data/tomcat"

ProxyRequests Off

ProxyPreserveHost On

ProxyPass / ajp://node1.sundayle.com:8009/

ProxyPassReverse / ajp://node1.sundayle.com:8009/

<Location />

Require all granted

</Location>

<Proxy *>

Require all granted

</Proxy>

</VirtualHost>

Load balancing a Tomcat cluster

<Proxy balancer://tomcatcs>

Sticky sessions and the status page for a load-balanced Tomcat cluster

Header add Set-Cookie "MYID=.%{BALANCER_WORKER_ROUTE}e; path=/" env=BALANCER_ROUTE_CHANGED

Httpd proxy directives explained

ProxyRequests Off # disable forward proxying

Tomcat session persistence

Session persistence with Nginx + Tomcat cluster

Nginx

cat /etc/nginx/conf.d/tomcat_lb_proxy.conf

upstream tomcatcs {

ip_hash;

server node1.sundayle.com:8080;

server node2.sundayle.com:8080;

}

server {

listen 80;

server_name node0.sundayle.com;

location / {

proxy_pass http://tomcatcs;

proxy_set_header Host $proxy_host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

}

}

tomcat1

mkdir /data/tomcat/ROOT -pv

vim /usr/local/tomcat/conf/server.xml

<Host name="localhost" appBase="webapps"

unpackWARs="true" autoDeploy="true">

<Valve className="org.apache.catalina.valves.AccessLogValve" directory="logs"

prefix="localhost_access_log" suffix=".txt"

pattern="%h %l %u %t &quot;%r&quot; %s %b" />

</Host>

<!-- Add the following -->

<Host name="node1.sundayle.com" appBase="/data/tomcat" unpackWARs="true" autoDeploy="true">

<Context path="" docBase="/data/tomcat/ROOT" debug="0" reloadable="false"/>

<Valve className="org.apache.catalina.valves.AccessLogValve" directory="logs"

prefix="node1.sundayle.com_access_log" suffix=".txt"

pattern="%h %l %u %t &quot;%r&quot; %s %b" />

</Host>

</Engine>

cat /data/tomcat/ROOT/index.jsp

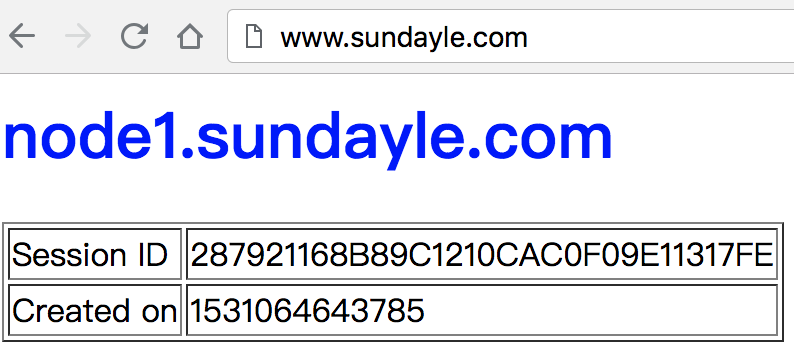

<html>

<head><title>node1</title></head>

<body>

<h1><font color="blue">node1.sundayle.com</font></h1>

<table align="center" border="1">

<tr>

<td>Session ID</td>

<% session.setAttribute("sundayle.com","sundayle.com"); %>

<td><%= session.getId() %></td>

</tr>

<tr>

<td>Created on</td>

<td><%= session.getCreationTime() %></td>

</tr>

</table>

</body>

</html>

scp /data/tomcat/ROOT/index.jsp root@node2.sundayle.com:/data/tomcat/ROOT/

tomcat2

sed -i 's#node1#node2#g' /data/tomcat/ROOT/index.jsp

sed -i 's#blue#red#g' /data/tomcat/ROOT/index.jsp

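Visiting node0.sundayle.com shows the result: once the scheduler has assigned a client to a Tomcat server, the client keeps being sent to that same server unless its IP address changes.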
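The stickiness follows from what ip_hash hashes on. According to the nginx documentation, the key for an IPv4 client is the first three octets of its address, so all clients in the same /24 network land on the same Tomcat. The function below only extracts that key to illustrate the grouping; it does not reproduce nginx's hash function, and the name ip_hash_key is hypothetical.

```shell
# Key that nginx's ip_hash uses for an IPv4 client: the first three octets.
ip_hash_key() {
    # ${1%.*} strips the last dot-separated field from the address
    printf '%s\n' "${1%.*}"
}

ip_hash_key 192.168.10.7     # prints 192.168.10
ip_hash_key 192.168.10.200   # prints 192.168.10 (same key, same backend)
```

A practical consequence is that testing from several machines on one subnet will always show the same node, so it helps to test from different networks as well.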

Session persistence with Httpd + Tomcat cluster

cat /etc/httpd/conf.d/tomcat_lb_proxy.conf

Header add Set-Cookie "MYID=.%{BALANCER_WORKER_ROUTE}e; path=/" env=BALANCER_ROUTE_CHANGED

<Proxy balancer://tomcatcs>

BalancerMember http://node1.sundayle.com:8080 route=tomcatA loadfactor=5

BalancerMember http://node2.sundayle.com:8080 route=tomcatB loadfactor=2

ProxySet lbmethod=byrequests

ProxySet stickysession=MYID

</Proxy>

<VirtualHost *:80>

ServerName node0.sundayle.com

ProxyRequests Off

ProxyVia On

ProxyPreserveHost On

<Proxy *>

Require all granted

</Proxy>

ProxyPass / balancer://tomcatcs/

ProxyPassReverse / balancer://tomcatcs/

<Location />

Require all granted

</Location>

<Location /http-stats>

SetHandler balancer-manager

ProxyPass !

Require all granted

</Location>

</VirtualHost>

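As with the Nginx setup, the Tomcat hosts need no configuration changes; just open a browser to verify.

Native session replication in a Tomcat cluster

Advantages:

1 Simple to implement.

2 No shared database is needed, so there are fewer components and a lower failure rate.

Disadvantages:

1 Synchronizing session data costs network bandwidth. Every change to session data must be propagated to all other machines, so the more machines there are, the higher the bandwidth overhead.

2 Every web server has to hold all session data. If the cluster as a whole carries a lot of session data (many concurrent visitors), the memory each machine spends on sessions becomes substantial.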
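The bandwidth concern can be made concrete with a rough count. The sketch assumes the behaviour described in the Tomcat clustering documentation: DeltaManager sends each session change to every other node, while BackupManager sends it to a single backup node. The function name replication_copies is hypothetical, and the count ignores message size and membership heartbeats.

```shell
# Copies sent across the network for one session change.
replication_copies() {
    # $1: manager type (delta|backup), $2: number of cluster nodes
    case "$1" in
        delta)  echo $(( $2 - 1 )) ;;                      # all-to-all replication
        backup) [ "$2" -gt 1 ] && echo 1 || echo 0 ;;      # one backup node only
        *)      return 1 ;;
    esac
}
```

With 5 nodes this gives 4 copies per change for DeltaManager and 1 for BackupManager, which is why the all-to-all mode is usually recommended only for small clusters.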

Before the experiment, remove the session-persistence settings from the nginx/httpd configuration and keep only the load-balancing part.

upstream tomcatcs {

#ip_hash; # disabled: session persistence is removed for this experiment

server node1.sundayle.com:8080;

server node2.sundayle.com:8080;

}

server {

listen 80;

server_name 192.168.10.100;

server_name node0.sundayle.com;

location / {

proxy_pass http://tomcatcs;

proxy_set_header Host $proxy_host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

}

}

tomcat server.xml

Edit Tomcat's server.xml and add a Cluster configuration. Defined inside the Engine container, it enables clustering for all hosts; defined inside a particular Host container, it enables clustering for that host only. Also note that the address="auto" value in Receiver is best changed to the IP address of the network interface of the host Tomcat is deployed on.

http://tomcat.apache.org/tomcat-8.5-doc/cluster-howto.html

<Cluster className="org.apache.catalina.ha.tcp.SimpleTcpCluster"

channelSendOptions="6">

<Manager className="org.apache.catalina.ha.session.BackupManager"

expireSessionsOnShutdown="false"

notifyListenersOnReplication="true"

mapSendOptions="6"/>

<!--

<Manager className="org.apache.catalina.ha.session.DeltaManager"

expireSessionsOnShutdown="false"

notifyListenersOnReplication="true"/>

-->

<Channel className="org.apache.catalina.tribes.group.GroupChannel">

<Membership className="org.apache.catalina.tribes.membership.McastService"

address="228.0.0.4"

port="45564"

frequency="500"

dropTime="3000"/>

<Receiver className="org.apache.catalina.tribes.transport.nio.NioReceiver"

address="192.168.10.101"

port="5000"

selectorTimeout="100"

maxThreads="6"/>

<Sender className="org.apache.catalina.tribes.transport.ReplicationTransmitter">

<Transport className="org.apache.catalina.tribes.transport.nio.PooledParallelSender"/>

</Sender>

<Interceptor className="org.apache.catalina.tribes.group.interceptors.TcpFailureDetector"/>

<Interceptor className="org.apache.catalina.tribes.group.interceptors.MessageDispatchInterceptor"/>

<Interceptor className="org.apache.catalina.tribes.group.interceptors.ThroughputInterceptor"/>

</Channel>

<Valve className="org.apache.catalina.ha.tcp.ReplicationValve"

filter=".*\.gif|.*\.js|.*\.jpeg|.*\.jpg|.*\.png|.*\.htm|.*\.html|.*\.css|.*\.txt"/>

<Deployer className="org.apache.catalina.ha.deploy.FarmWarDeployer"

tempDir="/tmp/war-temp/"

deployDir="/tmp/war-deploy/"

watchDir="/tmp/war-listen/"

watchEnabled="false"/>

<ClusterListener className="org.apache.catalina.ha.session.ClusterSessionListener"/>

</Cluster>

The Java project's web.xml must include:

<distributable/>

Tomcat's own web.xml can be copied for testing:

cp /usr/local/tomcat/conf/web.xml /data/tomcat/ROOT/WEB-INF/web.xml

cat /data/tomcat/ROOT/WEB-INF/web.xml # do the same on tomcat node2

<servlet>

<servlet-name>jsp</servlet-name>

<servlet-class>org.apache.jasper.servlet.JspServlet</servlet-class>

Tomcat + Memcached session persistence

Tomcat + memcached provides distributed session sharing through memcached-session-manager, an open-source high-availability session solution.

https://github.com/magro/memcached-session-manager/wiki/SetupAndConfiguration

Sticky sessions with Java default serialization

yum install -y memcached

PORT="11211" # listening port

systemctl start memcached

Download the jars to /usr/local/tomcat/lib:

memcached-session-manager

memcached-session-manager-tc8

spymemcached

cd /usr/local/tomcat/lib

Add inside <Context> </Context>:

vim /usr/local/tomcat/conf/context.xml

<Manager className="de.javakaffee.web.msm.MemcachedBackupSessionManager"

memcachedNodes="n1:192.168.10.101:11211,n2:192.168.10.102:11211"

failoverNodes="n1"

requestUriIgnorePattern=".*\.(ico|png|gif|jpg|css|js)$"

transcoderFactoryClass="de.javakaffee.web.msm.JavaSerializationTranscoderFactory"

/>

scp /usr/local/tomcat/conf/context.xml root@192.168.10.102:/usr/local/tomcat/conf/

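Sticky sessions: the parameter failoverNodes="n1" tells MSM to store sessions preferentially on memcached2, and to fall back to memcached1 (n1 in the configuration) only when memcached2 is down.

If tomcat1 goes down, its sessions remain available because they are stored on memcached2 on the tomcat2 host, so tomcat2 can keep serving them.

On tomcat2, the configuration only needs to be changed to failoverNodes="n2".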
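The failover rule can be sketched as a small selection function. This only illustrates the behaviour described above and is not MSM's actual code. The name pick_node and its three space-separated list arguments (all nodes, failoverNodes, nodes currently down) are hypothetical.

```shell
# Hypothetical sketch: choose where a sticky session is stored.
# $1: all memcached node ids, $2: failoverNodes, $3: ids that are currently down
pick_node() {
    # First choice: a node that is neither a failover node nor down.
    for n in $1; do
        case " $2 $3 " in *" $n "*) continue ;; esac
        echo "$n"; return 0
    done
    # Fallback: a failover node that is still up.
    for n in $2; do
        case " $3 " in *" $n "*) continue ;; esac
        echo "$n"; return 0
    done
    return 1   # no node available
}
```

On tomcat1 (failoverNodes="n1"), pick_node "n1 n2" "n1" "" selects n2, and pick_node "n1 n2" "n1" "n2" falls back to n1.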

Tomcat + memcached + Redis session persistence

Non-sticky sessions with Java default serialization

Non-sticky sessions need no failoverNodes setting, because sessions are served by all Tomcats in rotation.

https://github.com/magro/memcached-session-manager/wiki/SetupAndConfiguration#example-for-non-sticky-sessions--kryo

Download the jars to /usr/local/tomcat/lib:

cd /usr/local/tomcat/lib

Add inside <Context> </Context>:

<Manager className="de.javakaffee.web.msm.MemcachedBackupSessionManager"

memcachedNodes="redis://192.168.10.101:6379"

sticky="false"

sessionBackupAsync="false"

lockingMode="uriPattern:/path1|/path2"

requestUriIgnorePattern=".*\.(ico|png|gif|jpg|css|js)$"

transcoderFactoryClass="de.javakaffee.web.msm.JavaSerializationTranscoderFactory"

/>

Tomcat + Redis session persistence

https://www.jianshu.com/p/aa9f71d653af

Getting the client IP address in Tomcat

Configure the following in Tomcat's conf/server.xml:

<Valve className="org.apache.catalina.valves.AccessLogValve" directory="logs"

prefix="localhost_access_log" suffix=".txt"

pattern="%h %l %u %t %r %s %b" />

When a load balancer sits in front of Tomcat, the real client IP can be logged with the following configuration:

<Valve className="org.apache.catalina.valves.AccessLogValve" directory="logs"

prefix="localhost_access_log." suffix=".txt"

pattern="%{X-Forwarded-For}i %h %l %u %t %r %s %b" />
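With this pattern the first field of each log line is the raw X-Forwarded-For value, which may hold several comma-separated addresses when more than one proxy is involved. By convention each proxy appends the address it received the request from, so the first entry is the original client. The helper name xff_client is hypothetical. The header is client-supplied and can be forged unless the front proxy overwrites it.

```shell
# Hypothetical helper: print the original client address from an X-Forwarded-For value.
xff_client() {
    first=${1%%,*}                       # keep everything before the first comma
    printf '%s\n' "$first" | tr -d ' '   # strip any surrounding spaces
}
```

For example, xff_client "203.0.113.7, 192.168.10.100" prints 203.0.113.7.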

http://maxiecloud.com/2017/07/01/tomcat/

http://maxiecloud.com/2017/07/03/tomcat-session-remains/

http://tomcat.apache.org/tomcat-8.5-doc/index.html