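OpenStack Overview

The OpenStack project is an open-source cloud computing platform that supports all types of cloud environments. The project aims for simple implementation, massive scalability, and a rich set of features.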

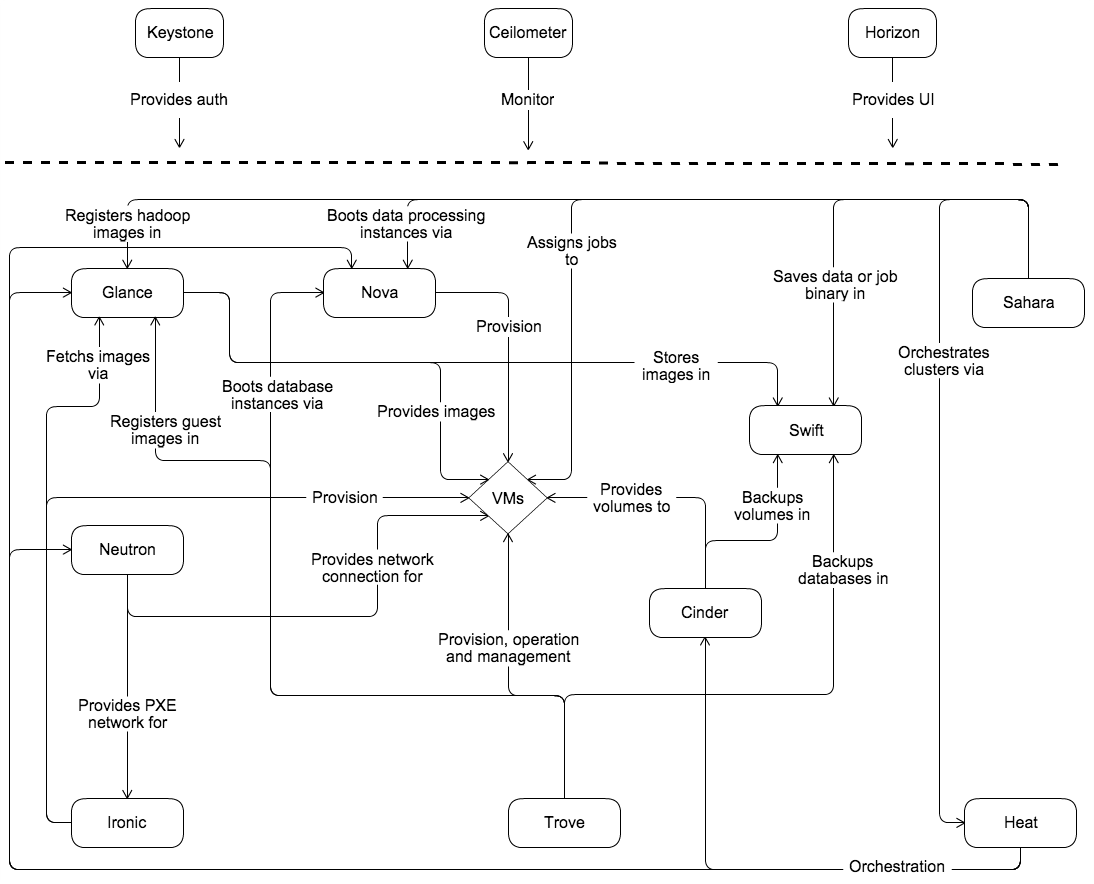

OpenStack Architecture Overview

Conceptual architecture

The following diagram shows the relationships among the OpenStack services:

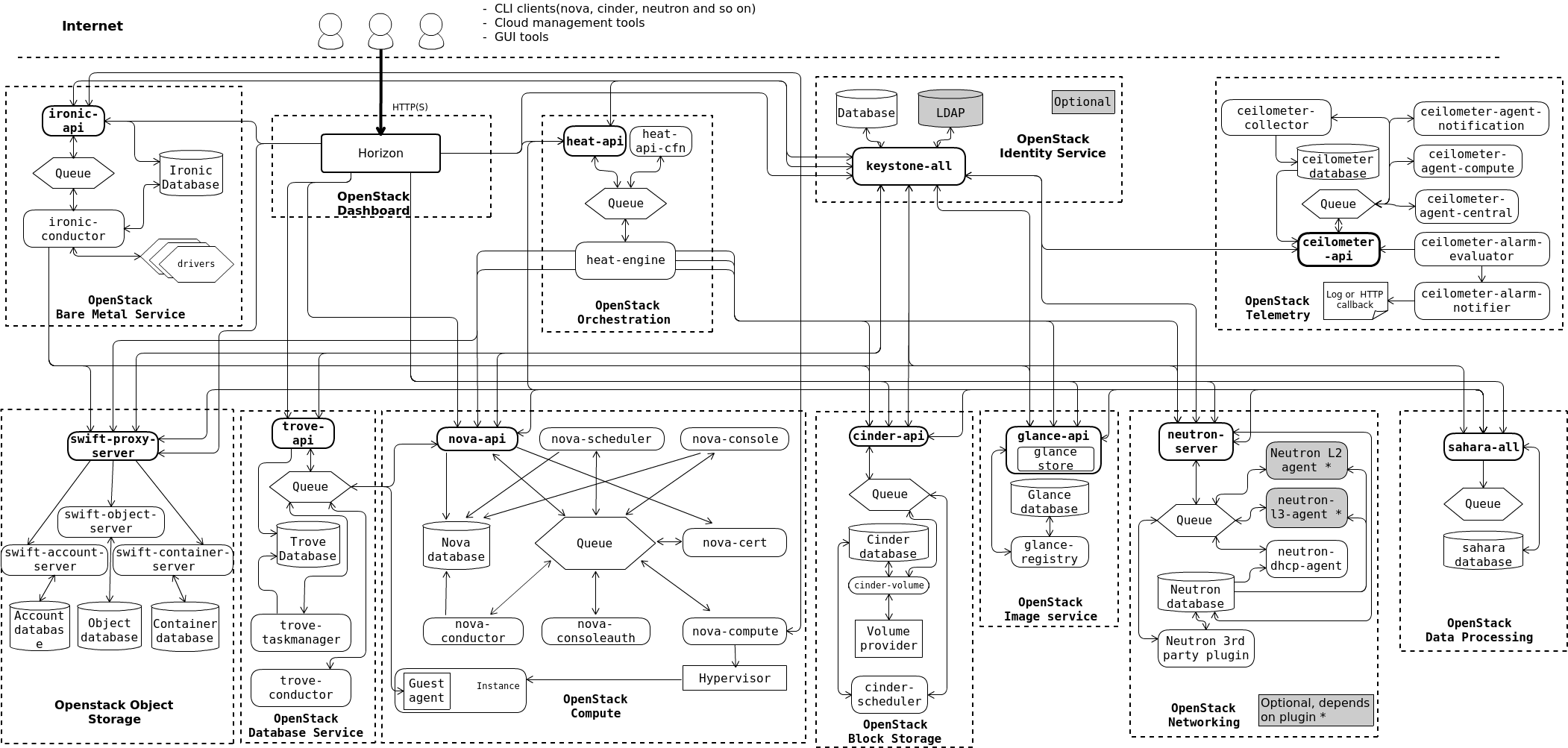

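Logical architecture

The following describes the most common, but not the only possible, architecture for an OpenStack cloud:

The services interact with each other through public APIs, except where privileged administrator commands are required.

Internally, OpenStack services are composed of several processes. Every service has at least one API process, which listens for API requests, preprocesses them, and passes them on to other parts of the service. With the exception of the Identity service, the actual work is done by distinct processes.

Users can access OpenStack through the web-based user interface implemented by the Horizon Dashboard, through command-line clients, and by issuing API requests with tools such as browser plug-ins or curl. For applications, several SDKs are available. Ultimately, all of these access methods issue REST API calls to the various OpenStack services.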
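Since every access method ends up as a REST call, it may help to see what one looks like. The sketch below builds the JSON body for a Keystone v3 password-authentication request and, in a second function, posts it with curl. It assumes the Identity endpoint `http://controller:5000/v3` and the placeholder credentials configured later in this guide; the function names `build_auth_body` and `request_token` are hypothetical, and values are not JSON-escaped, so it only suits simple names and passwords.

```shell
# Build the JSON body for a Keystone v3 password-authentication request.
# Arguments: user name, password, project name, domain name (placeholders).
build_auth_body() {
  local user="$1" pass="$2" project="$3" domain="$4"
  cat <<EOF
{"auth": {
  "identity": {
    "methods": ["password"],
    "password": {"user": {"name": "${user}", "domain": {"name": "${domain}"}, "password": "${pass}"}}
  },
  "scope": {"project": {"name": "${project}", "domain": {"name": "${domain}"}}}
}}
EOF
}

# Post the body to the Identity service. On success Keystone returns the
# token in the X-Subject-Token response header (shown because of curl -i).
# Assumption: the endpoint below is the one this guide sets up.
request_token() {
  build_auth_body "$@" |
    curl -si -H "Content-Type: application/json" -d @- \
      "http://controller:5000/v3/auth/tokens"
}
```

Once Keystone is running, `request_token admin ADMIN_PASS admin Default` should perform roughly the same exchange as the `openstack token issue` command used later in this guide.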

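Explanation

| Service | Project name | Description |
|---|---|---|
| Dashboard | Horizon | Web-based management interface |
| Compute service | Nova | Manages the lifecycle of compute instances in an OpenStack environment |
| Networking service | Neutron | Manages network resources for virtual machines |
| Object Storage service | Swift | Object storage, suited to "write once, read many" workloads |
| Block Storage service | Cinder | Provides persistent block storage to running instances |
| Identity service | Keystone | Provides authentication and authorization services |
| Image service | Glance | Provides registration and storage management for virtual machine images |
| Telemetry service | Ceilometer | Monitors and meters the OpenStack cloud for billing, benchmarking, scalability, and statistics |
| Orchestration service | Heat | Automates deployment |
| Database service | Trove | Provides database application services |
| Data Processing service | Sahara | Provisions and scales Hadoop clusters |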

Passwords

| Password name | Description |
|---|---|
| Database password (no variable used) | Root password for the database |
| ADMIN_PASS | Password of user admin |
| CINDER_DBPASS | Database password for the Block Storage service |
| CINDER_PASS | Password of Block Storage service user cinder |
| DASH_DBPASS | Database password for the Dashboard |
| DEMO_PASS | Password of user demo |
| GLANCE_DBPASS | Database password for Image service |
| GLANCE_PASS | Password of Image service user glance |
| KEYSTONE_DBPASS | Database password of Identity service |
| METADATA_SECRET | Secret for the metadata proxy |
| NEUTRON_DBPASS | Database password for the Networking service |
| NEUTRON_PASS | Password of Networking service user neutron |
| NOVA_DBPASS | Database password for Compute service |
| NOVA_PASS | Password of Compute service user nova |
| PLACEMENT_PASS | Password of the Placement service user placement |
| RABBIT_PASS | Password of RabbitMQ user openstack |
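The names in this table are placeholders that should be replaced with real secrets. One possible way to generate them is sketched below, assuming either `openssl` or `/dev/urandom` is available on the node; the helper name `gen_secret` is hypothetical.

```shell
# Print a random 20-character hexadecimal secret (10 random bytes).
gen_secret() {
  if command -v openssl >/dev/null 2>&1; then
    openssl rand -hex 10
  else
    # Fallback: read 10 bytes from the kernel RNG and hex-encode them.
    od -An -N10 -tx1 /dev/urandom | tr -d ' \n'
    echo
  fi
}

# Print one NAME=value line for each placeholder used in this part of the guide.
for name in ADMIN_PASS DEMO_PASS RABBIT_PASS KEYSTONE_DBPASS \
            GLANCE_DBPASS GLANCE_PASS NOVA_DBPASS NOVA_PASS \
            PLACEMENT_PASS METADATA_SECRET; do
  printf '%s=%s\n' "$name" "$(gen_secret)"
done
```

Hexadecimal secrets avoid characters that would need escaping in the database connection URLs and configuration files shown later.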

Base Environment Configuration

Operating system: CentOS 7.4, 64-bit

Disable the firewall and related services

Run on all nodes:

    systemctl stop NetworkManager
    systemctl stop firewalld
    setenforce 0    # switch SELinux to permissive mode until the next reboot

Configure the hostname and hosts file

hostname

On the controller node:

    hostnamectl set-hostname controller
    bash

On the compute node:

    hostnamectl set-hostname compute
    bash

On the cinder node:

    hostnamectl set-hostname cinder
    bash

hosts

Append the entries, then copy the file to the other nodes:

    cat << EOF >> /etc/hosts
    192.168.7.11 controller
    192.168.7.12 compute
    192.168.7.13 cinder
    EOF

    scp /etc/hosts root@compute:/etc/
    scp /etc/hosts root@cinder:/etc/
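Appending with `>>` adds duplicate entries if the step is run twice. A minimal sketch of an idempotent variant follows; the helper name `add_host` is hypothetical, and it only checks whether the host name is already mapped, not whether the existing address is the intended one.

```shell
# Append "IP NAME" to a hosts file only when NAME is not already mapped.
# Arguments: hosts file path, IP address, host name.
add_host() {
  local file="$1" ip="$2" name="$3"
  # Look for the name as a whole field (after the address) on a non-comment line.
  if ! awk -v n="$name" '
        !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Example (run as root on each node):
#   add_host /etc/hosts 192.168.7.11 controller
#   add_host /etc/hosts 192.168.7.12 compute
#   add_host /etc/hosts 192.168.7.13 cinder
```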

Configure NTP

Install and configure chrony

Run on the controller node:

    yum install -y chrony
    sed -i 's@#allow 192.168.0.0/16@allow 192.168.0.0/16@' /etc/chrony.conf
    systemctl start chronyd
    systemctl enable chronyd

Check the resulting configuration:

    grep -r '^[as]' /etc/chrony.conf

    server 0.centos.pool.ntp.org iburst
    server 1.centos.pool.ntp.org iburst
    server 2.centos.pool.ntp.org iburst
    server 3.centos.pool.ntp.org iburst
    allow 192.168.0.0/16

Run on the compute and cinder nodes. The first sed comments out the default servers, and the second inserts the controller as the only time source:

    yum install -y chrony
    sed -i 's@server\(.*\)@#server\1@g' /etc/chrony.conf
    sed -i '3iserver controller iburst' /etc/chrony.conf
    systemctl start chronyd
    systemctl enable chronyd

Check the resulting configuration:

    grep -r '^[as]' /etc/chrony.conf

    server controller iburst

Verify the time synchronization service

Run on the controller node:

    [root@controller ~]# chronyc sources
    210 Number of sources = 4
    MS Name/IP address         Stratum Poll Reach LastRx Last sample
    ===============================================================================
    ^- time5.aliyun.com              2   9   377   457   +467us[ +550us] +/-   21ms
    ^- makaki.miuku.net              2   9   317   501   +674us[ +756us] +/-  213ms
    ^- ntp1.ams1.nl.leaseweb.net     2   7   375   319  +7787us[+7872us] +/-  248ms
    ^* time4.aliyun.com              2  10   377   126   +310us[ +400us] +/- 5895us

Run on the compute and cinder nodes:

    [root@compute ~]# chronyc sources
    210 Number of sources = 1
    MS Name/IP address         Stratum Poll Reach LastRx Last sample
    ===============================================================================
    ^* controller                    3   6   177    40  +4835ns[  +12us] +/- 6255us
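In the `chronyc sources` listing, the source marked `^*` is the one currently selected for synchronization. A small sketch for checking this in a script follows; the function name `selected_source` is hypothetical, and it relies only on the column layout shown above.

```shell
# Read `chronyc sources` output on stdin and print the name of the source
# whose state column is "*" (the currently selected one). The exit status
# is non-zero when no source is selected.
selected_source() {
  awk 'substr($1, 2, 1) == "*" { print $2; found = 1 }
       END { exit !found }'
}

# Example on a compute node:
#   chronyc sources | selected_source    # expected to print "controller"
```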

Install the OpenStack repositories

    mv -f /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.backup
    wget -O /etc/yum.repos.d/CentOS-Base.repo http:
    yum install epel-release
    yum makecache
    yum upgrade

Official OpenStack (RDO) repository:

    yum install https://rdoproject.org/repos/rdo-release.rpm -y

Install the OpenStack packages

Run on all nodes:

    yum install -y centos-release-openstack-queens
    yum upgrade -y    # reboot the system if the kernel was updated
    yum install -y python-openstackclient
    yum install openstack-selinux -y

MySQL Database

Run on the controller node. Reference: https://docs.openstack.org/install-guide/environment-sql-database-rdo.html

Install MariaDB

    yum install -y mariadb mariadb-server python2-PyMySQL

Configure the MySQL parameters

    cat << EOF > /etc/my.cnf.d/openstack.conf
    [mysqld]
    bind-address = 192.168.7.11

    default-storage-engine = innodb
    innodb_file_per_table = on
    max_connections = 4096
    collation-server = utf8_general_ci
    character-set-server = utf8
    EOF

Start the database service and enable it at boot

    systemctl enable mariadb.service
    systemctl restart mariadb.service

mysql_secure_installation

To secure the database service, run the mysql_secure_installation script. In particular, set a suitable password for the database root account:

    mysql_secure_installation

RabbitMQ Message Queue

Run on the controller node. Reference: https://docs.openstack.org/install-guide/environment-messaging-rdo.html

Install RabbitMQ

    yum install rabbitmq-server -y

Start the message queue service and enable it at boot

    systemctl enable rabbitmq-server.service
    systemctl start rabbitmq-server.service

Add the openstack user

    rabbitmqctl add_user openstack RABBIT_PASS

Grant the openstack user configure, write, and read permissions

    rabbitmqctl set_permissions openstack ".*" ".*" ".*"

RabbitMQ monitoring (optional)

    rabbitmq-plugins list
    rabbitmq-plugins enable rabbitmq_management

Access URL: http:
Username: guest
Password: guest

Memcached

Run on the controller node. Reference: https://docs.openstack.org/install-guide/environment-memcached.html

Install memcached

    yum install memcached python-memcached -y

Change the listen address in OPTIONS

    sed -i 's#127.0.0.1#192.168.7.11#g' /etc/sysconfig/memcached

Check the result:

    cat /etc/sysconfig/memcached

    PORT="11211"
    USER="memcached"
    MAXCONN="1024"
    CACHESIZE="64"
    OPTIONS="-l 192.168.7.11,::1"

Start the Memcached service and enable it at boot

    systemctl enable memcached.service
    systemctl start memcached.service

Etcd

Run on the controller node. Reference: https://docs.openstack.org/install-guide/environment-etcd.html

Install etcd

etcd configuration

Quick script (note that the IP address is the controller's):

    sed -i 's@#ETCD_LISTEN_PEER_URLS="http://localhost:2380"@ETCD_LISTEN_PEER_URLS="http://192.168.7.11:2380"@' /etc/etcd/etcd.conf
    sed -i 's@ETCD_LISTEN_CLIENT_URLS="http://localhost:2379"@ETCD_LISTEN_CLIENT_URLS="http://192.168.7.11:2379"@' /etc/etcd/etcd.conf
    sed -i 's@ETCD_NAME="default"@ETCD_NAME="controller"@' /etc/etcd/etcd.conf
    sed -i 's@#ETCD_INITIAL_ADVERTISE_PEER_URLS="http://localhost:2380"@ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.7.11:2380"@' /etc/etcd/etcd.conf
    sed -i 's@ETCD_ADVERTISE_CLIENT_URLS="http://localhost:2379"@ETCD_ADVERTISE_CLIENT_URLS="http://192.168.7.11:2379"@' /etc/etcd/etcd.conf
    sed -i 's@#ETCD_INITIAL_CLUSTER="default=http://localhost:2380"@ETCD_INITIAL_CLUSTER="controller=http://192.168.7.11:2380"@' /etc/etcd/etcd.conf
    sed -i 's@#ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"@ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"@' /etc/etcd/etcd.conf
    sed -i 's@#ETCD_INITIAL_CLUSTER_STATE="new"@ETCD_INITIAL_CLUSTER_STATE="new"@' /etc/etcd/etcd.conf

Manual edit. The file should end up containing the following settings:

    cat /etc/etcd/etcd.conf

    #[Member]
    ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
    ETCD_LISTEN_PEER_URLS="http://192.168.7.11:2380"
    ETCD_LISTEN_CLIENT_URLS="http://192.168.7.11:2379"
    ETCD_NAME="controller"
    #[Clustering]
    ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.7.11:2380"
    ETCD_ADVERTISE_CLIENT_URLS="http://192.168.7.11:2379"
    ETCD_INITIAL_CLUSTER="controller=http://192.168.7.11:2380"
    ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
    ETCD_INITIAL_CLUSTER_STATE="new"
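The sed substitutions only work when the file still contains the exact default lines, commented or not. As an alternative sketch, the helper below sets a key regardless of its current value or comment state; the name `set_kv` is hypothetical, and it assumes the simple `KEY="value"` layout of `/etc/etcd/etcd.conf`.

```shell
# Set KEY="VALUE" in a shell-style config file such as /etc/etcd/etcd.conf.
# The first existing line for KEY is replaced whether or not it is commented
# out; if there is no such line, the assignment is appended.
set_kv() {
  local file="$1" key="$2" value="$3" tmp
  tmp=$(mktemp) || return 1
  awk -v k="$key" -v v="$value" '
    # Drop one optional leading "#" before comparing the key.
    { line = $0; sub(/^#/, "", line) }
    index(line, k "=") == 1 && !done { print k "=\"" v "\""; done = 1; next }
    { print }
    END { if (!done) print k "=\"" v "\"" }
  ' "$file" > "$tmp" && cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Example:
#   set_kv /etc/etcd/etcd.conf ETCD_NAME controller
#   set_kv /etc/etcd/etcd.conf ETCD_LISTEN_PEER_URLS http://192.168.7.11:2380
```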

Start the etcd service and enable it at boot

    systemctl enable etcd
    systemctl start etcd

Keystone Identity Service

Run on the controller node. Reference: https://docs.openstack.org/keystone/queens/install/keystone-install-rdo.html

Create the keystone database and grant privileges

    mysql -u root -p

    CREATE DATABASE keystone;
    GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' IDENTIFIED BY 'KEYSTONE_DBPASS';
    GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY 'KEYSTONE_DBPASS';
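The same create-and-grant pattern recurs for the Glance and Nova databases later in this guide. A sketch of a helper that prints the statements, so they can be reviewed and then piped to the `mysql` client, might look like this; the function name `service_db_sql` is hypothetical, and the password argument is inserted verbatim, so it must not contain a single quote.

```shell
# Print the SQL that creates a database and grants a service user full
# access to it from localhost and from any remote host.
# Arguments: database name, user name, password.
service_db_sql() {
  local db="$1" user="$2" pass="$3" host
  printf 'CREATE DATABASE %s;\n' "$db"
  for host in localhost %; do
    printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'%s' IDENTIFIED BY '%s';\n" \
      "$db" "$user" "$host" "$pass"
  done
}

# Example:
#   service_db_sql keystone keystone KEYSTONE_DBPASS | mysql -u root -p
```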

Install Keystone and the Apache HTTP server

    yum install openstack-keystone httpd mod_wsgi openstack-utils -y

Configure keystone.conf

Quick script:

    openstack-config --set /etc/keystone/keystone.conf database connection mysql+pymysql://keystone:KEYSTONE_DBPASS@controller/keystone
    openstack-config --set /etc/keystone/keystone.conf token provider fernet

Manual edit. The file should contain the following settings:

    cat /etc/keystone/keystone.conf

    [database]
    connection = mysql+pymysql://keystone:KEYSTONE_DBPASS@controller/keystone

    [token]
    provider = fernet
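After editing, it can be useful to confirm that a value landed in the intended section. The following is a minimal sketch of an INI reader in awk; the function name `ini_get` is hypothetical, and it assumes plain `key = value` lines without continuation lines or inline comments.

```shell
# Print the value of KEY in [SECTION] of an INI file.
# The exit status is non-zero when the key is not set in that section.
ini_get() {
  local file="$1" section="$2" key="$3"
  awk -v s="[$section]" -v k="$key" '
    /^\[/ { in_section = ($0 == s); next }
    in_section && /^[^#;]/ {
      # Split "key = value" at the first "=" and trim surrounding blanks.
      pos = index($0, "=")
      if (pos == 0) next
      name = substr($0, 1, pos - 1); value = substr($0, pos + 1)
      gsub(/^[ \t]+|[ \t]+$/, "", name); gsub(/^[ \t]+|[ \t]+$/, "", value)
      if (name == k) { print value; found = 1; exit }
    }
    END { exit !found }
  ' "$file"
}

# Example:
#   ini_get /etc/keystone/keystone.conf token provider
```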

Initialize the keystone database

    su -s /bin/sh -c "keystone-manage db_sync" keystone

Initialize the Fernet keys

    keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone
    keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

Bootstrap the Identity service

The bootstrap step creates the initial admin account and the endpoints used for API access:

    keystone-manage bootstrap --bootstrap-password ADMIN_PASS \
      --bootstrap-admin-url http://controller:35357/v3/ \
      --bootstrap-internal-url http://controller:5000/v3/ \
      --bootstrap-public-url http://controller:5000/v3/ \
      --bootstrap-region-id RegionOne

Configure httpd

Set ServerName. Quick script:

    sed -i '/#ServerName www.example.com:80/iServerName controller' /etc/httpd/conf/httpd.conf

Check the result:

    grep ^ServerName /etc/httpd/conf/httpd.conf
    ServerName controller

Create a symbolic link to /usr/share/keystone/wsgi-keystone.conf

    ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

Start the Apache HTTP service and enable it at boot

    systemctl enable httpd.service
    systemctl start httpd.service

Configure the administrative account

    export OS_USERNAME=admin
    export OS_PASSWORD=ADMIN_PASS
    export OS_PROJECT_NAME=admin
    export OS_USER_DOMAIN_NAME=Default
    export OS_PROJECT_DOMAIN_NAME=Default
    export OS_AUTH_URL=http://controller:35357/v3
    export OS_IDENTITY_API_VERSION=3

Create a domain, projects, users, and roles

Create a domain

A "default" domain already exists, but the formal way to create a new domain is:

    openstack domain create --description "An Example Domain" example

    +-------------+----------------------------------+
    | Field       | Value                            |
    +-------------+----------------------------------+
    | description | An Example Domain                |
    | enabled     | True                             |
    | id          | 4e07cb0139e947a7b62479073806f305 |
    | name        | example                          |
    | tags        | []                               |
    +-------------+----------------------------------+

Create the service project

    openstack project create --domain default --description "Service Project" service

    +-------------+----------------------------------+
    | Field       | Value                            |
    +-------------+----------------------------------+
    | description | Service Project                  |
    | domain_id   | default                          |
    | enabled     | True                             |
    | id          | 6ba7281b4b784118a67641f304a6d8fc |
    | is_domain   | False                            |
    | name        | service                          |
    | parent_id   | default                          |
    | tags        | []                               |
    +-------------+----------------------------------+

Create the demo project

    openstack project create --domain default --description "Demo Project" demo

    +-------------+----------------------------------+
    | Field       | Value                            |
    +-------------+----------------------------------+
    | description | Demo Project                     |
    | domain_id   | default                          |
    | enabled     | True                             |
    | id          | fc0b58a87559424399e26f0e833f0429 |
    | is_domain   | False                            |
    | name        | demo                             |
    | parent_id   | default                          |
    | tags        | []                               |
    +-------------+----------------------------------+

Create the demo user

    openstack user create --domain default --password-prompt demo
    User Password:           # enter DEMO_PASS
    Repeat User Password:
    +---------------------+----------------------------------+
    | Field               | Value                            |
    +---------------------+----------------------------------+
    | domain_id           | default                          |
    | enabled             | True                             |
    | id                  | d441c74843ea4da9a21f90499cb43fb5 |
    | name                | demo                             |
    | options             | {}                               |
    | password_expires_at | None                             |
    +---------------------+----------------------------------+

Create the user role

    openstack role create user

    +-----------+----------------------------------+
    | Field     | Value                            |
    +-----------+----------------------------------+
    | domain_id | None                             |
    | id        | 9d2ec4ead71e4c46a3fc42b08eed1aba |
    | name      | user                             |
    +-----------+----------------------------------+

Assign the user role to the demo user in the demo project

    openstack role add --project demo --user demo user

Note: this command produces no output.

Verify operation

Unset the temporary OS_AUTH_URL and OS_PASSWORD environment variables. Verifying the Identity service at this point helps ensure that the other components can be installed correctly.

    unset OS_AUTH_URL OS_PASSWORD

Request a token as the admin user

    openstack --os-auth-url http://controller:35357/v3 \
      --os-project-domain-name Default --os-user-domain-name Default \
      --os-project-name admin --os-username admin token issue
    Password:    # enter ADMIN_PASS
    +------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+
    | Field      | Value                                                                                                                                                                                   |
    +------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+
    | expires    | 2018-07-12T15:38:39+0000                                                                                                                                                                |
    | id         | gAAAAABbR2fvxfr47jzQWswuY_tbelrEKaohloWokuHFVYTOYK_jX9jxy7g_ijwL1fUGUwsBaF5qwLZDvjiQk8hl5R1lkm2b1dje_GG7bX3QRtbrp7m8B-aWvLyaje7yYB0nuXJv9P-HuZ7YVM8U8XRvrFjfaKOtvQ3DpyhF81djABYIeUTfJMg |
    | project_id | 397ed95bb8d244fc924fb974235c6661                                                                                                                                                        |
    | user_id    | fa5af0071d424540af93d9c919721dc3                                                                                                                                                        |
    +------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

Request a token as the demo user

    openstack --os-auth-url http://controller:5000/v3 \
      --os-project-domain-name Default --os-user-domain-name Default \
      --os-project-name demo --os-username demo token issue
    Password:    # enter DEMO_PASS
    +------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+
    | Field      | Value                                                                                                                                                                                   |
    +------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+
    | expires    | 2018-07-12T15:39:49+0000                                                                                                                                                                |
    | id         | gAAAAABbR2g1PYBAtYoiE8yGss1-myGMbHnoMezSmYeBSyPbqFlTj87LnVdCv5J-_s9-h4m35M1JZhJcT8bvVUunO4GsNheLLUod3ZshSgbkpFXbZqUCS9ul288_T8lapvG8Dt0i5CFNLPwQdlrcpuSvS3WpCceE1LDrqPIFrv0UJ4P6a0cxowc |
    | project_id | fc0b58a87559424399e26f0e833f0429                                                                                                                                                        |
    | user_id    | d441c74843ea4da9a21f90499cb43fb5                                                                                                                                                        |
    +------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

Create the OpenStack client environment scripts

Create admin-openrc

    cat << EOF > admin-openrc
    export OS_PROJECT_DOMAIN_NAME=Default
    export OS_USER_DOMAIN_NAME=Default
    export OS_PROJECT_NAME=admin
    export OS_USERNAME=admin
    export OS_PASSWORD=ADMIN_PASS
    export OS_AUTH_URL=http://controller:5000/v3
    export OS_IDENTITY_API_VERSION=3
    export OS_IMAGE_API_VERSION=2
    EOF

Create demo-openrc with the following content:

    export OS_PROJECT_DOMAIN_NAME=Default
    export OS_USER_DOMAIN_NAME=Default
    export OS_PROJECT_NAME=demo
    export OS_USERNAME=demo
    export OS_PASSWORD=DEMO_PASS
    export OS_AUTH_URL=http://controller:5000/v3
    export OS_IDENTITY_API_VERSION=3
    export OS_IMAGE_API_VERSION=2

Load the script and request an authentication token

    . admin-openrc
    openstack token issue

    +------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+
    | Field      | Value                                                                                                                                                                                   |
    +------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+
    | expires    | 2018-07-12T15:45:11+0000                                                                                                                                                                |
    | id         | gAAAAABbR2l3EuIJ8ih_92Y_wjXlFR3DWO0NmOFuPKlXgfkY04rFvr4dun424ftukZR6stk4qFGZD_H6TYmr_obeth6BbKYNczz1D0TwjRq-R12OedXVAeHpdPymkEHwnMayCBkE9aynkYH7tbAKovMLjsPXM5RnQl6YHYBa6FdkFAZfLQdGm6A |
    | project_id | 397ed95bb8d244fc924fb974235c6661                                                                                                                                                        |
    | user_id    | fa5af0071d424540af93d9c919721dc3                                                                                                                                                        |
    +------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+
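The two environment scripts differ only in user, project, and password, and they hold a plaintext password. A sketch of a helper that writes such a file with owner-only permissions follows; the function name `write_openrc` is hypothetical, and values are written unquoted, so it assumes passwords without spaces or shell metacharacters.

```shell
# Write an OpenStack client environment file readable only by its owner.
# Arguments: output path, user name, project name, password.
write_openrc() {
  local file="$1" user="$2" project="$3" pass="$4"
  # Restrict permissions for a newly created file; the umask change is
  # confined to this subshell.
  ( umask 077
    cat > "$file" <<EOF
export OS_PROJECT_DOMAIN_NAME=Default
export OS_USER_DOMAIN_NAME=Default
export OS_PROJECT_NAME=${project}
export OS_USERNAME=${user}
export OS_PASSWORD=${pass}
export OS_AUTH_URL=http://controller:5000/v3
export OS_IDENTITY_API_VERSION=3
export OS_IMAGE_API_VERSION=2
EOF
  ) || return 1
  # Also tighten permissions when the file already existed.
  chmod 600 "$file"
}

# Example:
#   write_openrc admin-openrc admin admin ADMIN_PASS
#   write_openrc demo-openrc demo demo DEMO_PASS
```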

Glance Image Service

Run on the controller node. Reference: https://docs.openstack.org/glance/queens/install/install-rdo.html

Create the glance database and grant privileges

    mysql -u root -p

    CREATE DATABASE glance;
    GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY 'GLANCE_DBPASS';
    GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY 'GLANCE_DBPASS';

Create the glance user

    . admin-openrc

    openstack user create --domain default --password-prompt glance
    User Password:           # enter GLANCE_PASS
    Repeat User Password:
    +---------------------+----------------------------------+
    | Field               | Value                            |
    +---------------------+----------------------------------+
    | domain_id           | default                          |
    | enabled             | True                             |
    | id                  | 7210fe22f28f483baec3fbd0a583300b |
    | name                | glance                           |
    | options             | {}                               |
    | password_expires_at | None                             |
    +---------------------+----------------------------------+

Add the admin role to the glance user in the service project

    openstack role add --project service --user glance admin

Create the glance service entity

    openstack service create --name glance --description "OpenStack Image" image

    +-------------+----------------------------------+
    | Field       | Value                            |
    +-------------+----------------------------------+
    | description | OpenStack Image                  |
    | enabled     | True                             |
    | id          | 3b955fc4bf384c098e4bc995967b52a9 |
    | name        | glance                           |
    | type        | image                            |
    +-------------+----------------------------------+

Create the Image service API endpoints

    openstack endpoint create --region RegionOne image public http://controller:9292

    +--------------+----------------------------------+
    | Field        | Value                            |
    +--------------+----------------------------------+
    | enabled      | True                             |
    | id           | 49e262cbc7f8434ea55a0e18a59a479a |
    | interface    | public                           |
    | region       | RegionOne                        |
    | region_id    | RegionOne                        |
    | service_id   | 7d89071e87924ef5acb01be67eb3595e |
    | service_name | glance                           |
    | service_type | image                            |
    | url          | http://controller:9292           |
    +--------------+----------------------------------+

    openstack endpoint create --region RegionOne image internal http://controller:9292

    +--------------+----------------------------------+
    | Field        | Value                            |
    +--------------+----------------------------------+
    | enabled      | True                             |
    | id           | d93e537348834231a9f4eda12e1f336e |
    | interface    | internal                         |
    | region       | RegionOne                        |
    | region_id    | RegionOne                        |
    | service_id   | 7d89071e87924ef5acb01be67eb3595e |
    | service_name | glance                           |
    | service_type | image                            |
    | url          | http://controller:9292           |
    +--------------+----------------------------------+

    openstack endpoint create --region RegionOne image admin http://controller:9292

    +--------------+----------------------------------+
    | Field        | Value                            |
    +--------------+----------------------------------+
    | enabled      | True                             |
    | id           | fb2f66e0da7a4692a3db3446c8976256 |
    | interface    | admin                            |
    | region       | RegionOne                        |
    | region_id    | RegionOne                        |
    | service_id   | 7d89071e87924ef5acb01be67eb3595e |
    | service_name | glance                           |
    | service_type | image                            |
    | url          | http://controller:9292           |
    +--------------+----------------------------------+
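Each service in this guide registers the same three interfaces (public, internal, admin). As a sketch, the repetition can be expressed as a loop that prints the commands for review before they are run; the function name `endpoint_commands` is hypothetical.

```shell
# Print the three `openstack endpoint create` commands for one service.
# Arguments: service type, endpoint URL, optional region (default RegionOne).
endpoint_commands() {
  local type="$1" url="$2" region="${3:-RegionOne}" iface
  for iface in public internal admin; do
    printf 'openstack endpoint create --region %s %s %s %s\n' \
      "$region" "$type" "$iface" "$url"
  done
}

# Example (print, then execute once the output looks right):
#   endpoint_commands image http://controller:9292
#   endpoint_commands image http://controller:9292 | sh
```

Printing first rather than executing directly keeps the helper safe to run on a machine without admin credentials loaded.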

Install Glance

    yum install openstack-glance -y

Configure glance-api.conf

Quick script:

    openstack-config --set /etc/glance/glance-api.conf database connection mysql+pymysql:
    openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_uri http:
    openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_url http:
    openstack-config --set /etc/glance/glance-api.conf keystone_authtoken memcached_servers controller:11211
    openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_type password
    openstack-config --set /etc/glance/glance-api.conf keystone_authtoken project_domain_name default
    openstack-config --set /etc/glance/glance-api.conf keystone_authtoken user_domain_name default
    openstack-config --set /etc/glance/glance-api.conf keystone_authtoken project_name service
    openstack-config --set /etc/glance/glance-api.conf keystone_authtoken username glance
    openstack-config --set /etc/glance/glance-api.conf keystone_authtoken password GLANCE_PASS
    openstack-config --set /etc/glance/glance-api.conf paste_deploy flavor keystone
    openstack-config --set /etc/glance/glance-api.conf glance_store stores file,http
    openstack-config --set /etc/glance/glance-api.conf glance_store default_store file
    openstack-config --set /etc/glance/glance-api.conf glance_store filesystem_store_datadir /var/lib/glance/images

Manual edit:

    vim /etc/glance/glance-api.conf

    [database]
    connection = mysql+pymysql:

    [keystone_authtoken]
    auth_uri = http:
    auth_url = http:
    memcached_servers = controller:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = glance
    password = GLANCE_PASS

    [paste_deploy]
    flavor = keystone

    [glance_store]
    stores = file,http
    default_store = file
    filesystem_store_datadir = /var/lib/glance/images

Port: 9292

Configure glance-registry.conf

Quick script:

    openstack-config --set /etc/glance/glance-registry.conf database connection mysql+pymysql://glance:GLANCE_DBPASS@controller/glance
    openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken auth_uri http://controller:5000
    openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken auth_url http://controller:35357
    openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken memcached_servers controller:11211
    openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken auth_type password
    openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken project_domain_name default
    openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken user_domain_name default
    openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken project_name service
    openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken username glance
    openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken password GLANCE_PASS
    openstack-config --set /etc/glance/glance-registry.conf paste_deploy flavor keystone

Manual edit:

    vim /etc/glance/glance-registry.conf

    [database]
    connection = mysql+pymysql://glance:GLANCE_DBPASS@controller/glance

    [keystone_authtoken]
    auth_uri = http://controller:5000
    auth_url = http://controller:35357
    memcached_servers = controller:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = glance
    password = GLANCE_PASS

    [paste_deploy]
    flavor = keystone

Port: 9191

Initialize the glance database

    su -s /bin/sh -c "glance-manage db_sync" glance

Start the Glance services and enable them at boot

    systemctl enable openstack-glance-api.service openstack-glance-registry.service
    systemctl start openstack-glance-api.service openstack-glance-registry.service

Verify operation

Load the admin-openrc credentials, then download the source image:

    wget http://download.cirros-cloud.net/0.4.0/cirros-0.4.0-x86_64-disk.img

Upload the image

Upload the image to the Image service using the QCOW2 disk format and the bare container format, and make it public so that all projects can access it:

    openstack image create "cirros" \
      --file cirros-0.4.0-x86_64-disk.img \
      --disk-format qcow2 --container-format bare \
      --public

    +------------------+------------------------------------------------------+
    | Field            | Value                                                |
    +------------------+------------------------------------------------------+
    | checksum         | 443b7623e27ecf03dc9e01ee93f67afe                     |
    | container_format | bare                                                 |
    | created_at       | 2018-07-13T02:46:27Z                                 |
    | disk_format      | qcow2                                                |
    | file             | /v2/images/c1489536-e12d-4323-8fb5-14128f4d8d5e/file |
    | id               | c1489536-e12d-4323-8fb5-14128f4d8d5e                 |
    | min_disk         | 0                                                    |
    | min_ram          | 0                                                    |
    | name             | cirros                                               |
    | owner            | 397ed95bb8d244fc924fb974235c6661                     |
    | protected        | False                                                |
    | schema           | /v2/schemas/image                                    |
    | size             | 12716032                                             |
    | status           | active                                               |
    | tags             |                                                      |
    | updated_at       | 2018-07-13T02:46:28Z                                 |
    | virtual_size     | None                                                 |
    | visibility       | public                                               |
    +------------------+------------------------------------------------------+

List the uploaded image

    openstack image list

    +--------------------------------------+--------+--------+
    | ID                                   | Name   | Status |
    +--------------------------------------+--------+--------+
    | c1489536-e12d-4323-8fb5-14128f4d8d5e | cirros | active |
    +--------------------------------------+--------+--------+
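As an extra check, the `checksum` field reported by the Image service can be compared with a digest of the local file. The sketch below assumes that the field is an MD5 digest of the image data, which is the usual behaviour for this release, and that `md5sum` is installed; the function name `verify_md5` is hypothetical.

```shell
# Compare a file's MD5 digest with an expected value, for example the
# `checksum` field printed by `openstack image create`.
# The exit status is 0 on a match and non-zero otherwise.
verify_md5() {
  local file="$1" expected="$2" actual
  actual=$(md5sum "$file" | awk '{ print $1 }')
  [ -n "$actual" ] && [ "$actual" = "$expected" ]
}

# Example with the values shown above:
#   verify_md5 cirros-0.4.0-x86_64-disk.img 443b7623e27ecf03dc9e01ee93f67afe \
#     && echo "checksum matches"
```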

Glance configuration reference: https://docs.openstack.org/glance/queens/configuration/index.html

Nova Controller

Run on the controller node. Reference: https://docs.openstack.org/nova/queens/install/controller-install-rdo.html

Create the Nova databases and grant privileges

Create the nova_api, nova, and nova_cell0 databases:

    mysql -u root -p

    CREATE DATABASE nova_api;
    CREATE DATABASE nova;
    CREATE DATABASE nova_cell0;
    GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';
    GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';
    GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';
    GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';
    GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';
    GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';

Create the nova service credentials

Create the nova user

    . admin-openrc

    openstack user create --domain default --password-prompt nova
    User Password:           # enter NOVA_PASS
    Repeat User Password:
    +---------------------+----------------------------------+
    | Field               | Value                            |
    +---------------------+----------------------------------+
    | domain_id           | default                          |
    | enabled             | True                             |
    | id                  | c3cb8b7a6d9a4a65a603028d7a170a67 |
    | name                | nova                             |
    | options             | {}                               |
    | password_expires_at | None                             |
    +---------------------+----------------------------------+

Add the admin role to the nova user

    openstack role add --project service --user nova admin

Create the nova service entity

    openstack service create --name nova --description "OpenStack Compute" compute

    +-------------+----------------------------------+
    | Field       | Value                            |
    +-------------+----------------------------------+
    | description | OpenStack Compute                |
    | enabled     | True                             |
    | id          | 584f29fd4e784c67b0ca7cfc7506cb13 |
    | name        | nova                             |
    | type        | compute                          |
    +-------------+----------------------------------+

Create the Compute API endpoints

    openstack endpoint create --region RegionOne compute public http://controller:8774/v2.1

    +--------------+----------------------------------+
    | Field        | Value                            |
    +--------------+----------------------------------+
    | enabled      | True                             |
    | id           | b097b4d65bd847d18bfe7d113e3bf040 |
    | interface    | public                           |
    | region       | RegionOne                        |
    | region_id    | RegionOne                        |
    | service_id   | 584f29fd4e784c67b0ca7cfc7506cb13 |
    | service_name | nova                             |
    | service_type | compute                          |
    | url          | http://controller:8774/v2.1      |
    +--------------+----------------------------------+


    openstack endpoint create --region RegionOne compute internal http://controller:8774/v2.1

    +--------------+----------------------------------+
    | Field        | Value                            |
    +--------------+----------------------------------+
    | enabled      | True                             |
    | id           | a1d0c23b7bdd46d4bb8018338a40289b |
    | interface    | internal                         |
    | region       | RegionOne                        |
    | region_id    | RegionOne                        |
    | service_id   | 584f29fd4e784c67b0ca7cfc7506cb13 |
    | service_name | nova                             |
    | service_type | compute                          |
    | url          | http://controller:8774/v2.1      |
    +--------------+----------------------------------+

The admin endpoint is created the same way (its output is not recorded here):

    openstack endpoint create --region RegionOne compute admin http://controller:8774/v2.1

Create the Placement service credentials

Create the placement user

    openstack user create --domain default --password-prompt placement
    User Password:           # enter PLACEMENT_PASS
    Repeat User Password:
    +---------------------+----------------------------------+
    | Field               | Value                            |
    +---------------------+----------------------------------+
    | domain_id           | default                          |
    | enabled             | True                             |
    | id                  | 3d702a3aa8aa45e695bff7600a2511a2 |
    | name                | placement                        |
    | options             | {}                               |
    | password_expires_at | None                             |
    +---------------------+----------------------------------+

Add the placement user to the service project with the admin role

    openstack role add --project service --user placement admin

Create the Placement API service entity

    openstack service create --name placement --description "Placement API" placement

    +-------------+----------------------------------+
    | Field       | Value                            |
    +-------------+----------------------------------+
    | description | Placement API                    |
    | enabled     | True                             |
    | id          | 3b783948e92f4953808cc96a9e4eb848 |
    | name        | placement                        |
    | type        | placement                        |
    +-------------+----------------------------------+

Create the Placement API endpoints

    openstack endpoint create --region RegionOne placement public http://controller:8778

    +--------------+----------------------------------+
    | Field        | Value                            |
    +--------------+----------------------------------+
    | enabled      | True                             |
    | id           | c5c31a3c89444c0aab1163400f1cf9de |
    | interface    | public                           |
    | region       | RegionOne                        |
    | region_id    | RegionOne                        |
    | service_id   | 3b783948e92f4953808cc96a9e4eb848 |
    | service_name | placement                        |
    | service_type | placement                        |
    | url          | http://controller:8778           |
    +--------------+----------------------------------+

    openstack endpoint create --region RegionOne placement internal http://controller:8778

    +--------------+----------------------------------+
    | Field        | Value                            |
    +--------------+----------------------------------+
    | enabled      | True                             |
    | id           | 2efc01499ec24037b5007df8507372e5 |
    | interface    | internal                         |
    | region       | RegionOne                        |
    | region_id    | RegionOne                        |
    | service_id   | 3b783948e92f4953808cc96a9e4eb848 |
    | service_name | placement                        |
    | service_type | placement                        |
    | url          | http://controller:8778           |
    +--------------+----------------------------------+

    openstack endpoint create --region RegionOne placement admin http://controller:8778

    +--------------+----------------------------------+
    | Field        | Value                            |
    +--------------+----------------------------------+
    | enabled      | True                             |
    | id           | 53fcdec1097e48789015ceba8b086bf0 |
    | interface    | admin                            |
    | region       | RegionOne                        |
    | region_id    | RegionOne                        |
    | service_id   | 3b783948e92f4953808cc96a9e4eb848 |
    | service_name | placement                        |
    | service_type | placement                        |
    | url          | http://controller:8778           |
    +--------------+----------------------------------+

安装配置nova 安装nova组件 1 2 3 yum install openstack-nova-api openstack-nova-conductor \ openstack-nova-console openstack-nova-novncproxy \ openstack-nova-scheduler openstack-nova-placement-api -y

Configure nova.conf

Quick setup script

    openstack-config --set /etc/nova/nova.conf DEFAULT enabled_apis osapi_compute,metadata
    openstack-config --set /etc/nova/nova.conf api_database connection mysql+pymysql:
    openstack-config --set /etc/nova/nova.conf database connection mysql+pymysql:
    openstack-config --set /etc/nova/nova.conf DEFAULT transport_url rabbit:
    openstack-config --set /etc/nova/nova.conf api auth_strategy keystone
    openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_uri http:
    openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_url http:
    openstack-config --set /etc/nova/nova.conf keystone_authtoken memcached_servers controller:11211
    openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_type password
    openstack-config --set /etc/nova/nova.conf keystone_authtoken project_domain_name default
    openstack-config --set /etc/nova/nova.conf keystone_authtoken user_domain_name default
    openstack-config --set /etc/nova/nova.conf keystone_authtoken project_name service
    openstack-config --set /etc/nova/nova.conf keystone_authtoken username nova
    openstack-config --set /etc/nova/nova.conf keystone_authtoken password NOVA_PASS
    openstack-config --set /etc/nova/nova.conf DEFAULT my_ip 192.168.7.11
    openstack-config --set /etc/nova/nova.conf DEFAULT use_neutron True
    openstack-config --set /etc/nova/nova.conf DEFAULT firewall_driver nova.virt.firewall.NoopFirewallDriver
    openstack-config --set /etc/nova/nova.conf vnc enabled true
    openstack-config --set /etc/nova/nova.conf vnc vncserver_listen 0.0.0.0
    openstack-config --set /etc/nova/nova.conf vnc vncserver_proxyclient_address \$my_ip
    openstack-config --set /etc/nova/nova.conf glance api_servers http:
    openstack-config --set /etc/nova/nova.conf oslo_concurrency lock_path /var/lib/nova/tmp
    openstack-config --set /etc/nova/nova.conf placement os_region_name RegionOne
    openstack-config --set /etc/nova/nova.conf placement project_domain_name Default
    openstack-config --set /etc/nova/nova.conf placement project_name service
    openstack-config --set /etc/nova/nova.conf placement auth_type password
    openstack-config --set /etc/nova/nova.conf placement user_domain_name Default
    openstack-config --set /etc/nova/nova.conf placement auth_url http:
    openstack-config --set /etc/nova/nova.conf placement username placement
    openstack-config --set /etc/nova/nova.conf placement password PLACEMENT_PASS
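The quick setup script repeats the same command prefix on every line. A minimal sketch of a wrapper that reads "section key value" triples instead is shown here. The function name apply_settings and the SETTER override variable are hypothetical and not part of openstack-config; SETTER=echo only prints what would be executed.

```shell
# apply_settings FILE: read "section key value" triples from stdin and
# write each one to FILE. SETTER is a hypothetical override hook; it
# defaults to openstack-config, and SETTER=echo gives a dry run.
apply_settings() {
    conf_file=$1
    while read -r section key value; do
        # Skip blank lines and comment lines.
        case $section in
            ''|'#'*) continue ;;
        esac
        # Keep the setter from consuming the list on stdin.
        ${SETTER:-openstack-config} --set "$conf_file" "$section" "$key" "$value" </dev/null
    done
}

# Dry run: print the commands instead of changing /etc/nova/nova.conf.
SETTER=echo apply_settings /etc/nova/nova.conf <<'EOF'
DEFAULT use_neutron True
vnc enabled true
EOF
```

Keeping the settings in one list makes it easier to compare them against the manually edited file.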

Manual edit

    vim /etc/nova/nova.conf

    [DEFAULT]
    enabled_apis = osapi_compute,metadata
    transport_url = rabbit:
    my_ip = 192.168.7.11
    use_neutron = True
    firewall_driver = nova.virt.firewall.NoopFirewallDriver

    [api_database]
    connection = mysql+pymysql:

    [database]
    connection = mysql+pymysql:

    [api]
    auth_strategy = keystone

    [keystone_authtoken]
    auth_url = http:
    memcached_servers = controller:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = nova
    password = NOVA_PASS

    [vnc]
    enabled = true
    server_listen = 0.0.0.0
    server_proxyclient_address = $my_ip

    [glance]
    api_servers = http:

    [oslo_concurrency]
    lock_path = /var/lib/nova/tmp

    [placement]
    os_region_name = RegionOne
    project_domain_name = Default
    project_name = service
    auth_type = password
    user_domain_name = Default
    auth_url = http:
    username = placement
    password = PLACEMENT_PASS

Configure the httpd file nova-placement-api.conf

    cat << EOF > /etc/httpd/conf.d/00-nova-placement-api.conf
    Listen 8778

    <VirtualHost *:8778>
      WSGIProcessGroup nova-placement-api
      WSGIApplicationGroup %{GLOBAL}
      WSGIPassAuthorization On
      WSGIDaemonProcess nova-placement-api processes=3 threads=1 user=nova group=nova
      WSGIScriptAlias / /usr/bin/nova-placement-api
      <IfVersion >= 2.4>
        ErrorLogFormat "%M"
      </IfVersion>
      ErrorLog /var/log/nova/nova-placement-api.log
      #SSLEngine On
      #SSLCertificateFile …
      #SSLCertificateKeyFile …
    </VirtualHost>

    Alias /nova-placement-api /usr/bin/nova-placement-api
    <Location /nova-placement-api>
      SetHandler wsgi-script
      Options +ExecCGI
      WSGIProcessGroup nova-placement-api
      WSGIApplicationGroup %{GLOBAL}
      WSGIPassAuthorization On
    </Location>

    <Directory /usr/bin>
      <IfVersion >= 2.4>
        Require all granted
      </IfVersion>
      <IfVersion < 2.4>
        Order allow,deny
        Allow from all
      </IfVersion>
    </Directory>
    EOF

Initialize the databases

Populate the nova-api database

    su -s /bin/sh -c "nova-manage api_db sync" nova
    /usr/lib/python2.7/site-packages/oslo_db/sqlalchemy/enginefacade.py:332: NotSupportedWarning: Configuration option(s) ['use_tpool'] not supported
      exception.NotSupportedWarning

Register the cell0 database

    su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

Create the cell1 cell

    su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova
    e1657af2-a716-49f7-a3cf-a2eb71af7c80

Populate the nova database

    su -s /bin/sh -c "nova-manage db sync" nova

Verify that cell0 and cell1 are registered

    nova-manage cell_v2 list_cells
    +-------+--------------------------------------+------------------------------------+-------------------------------------------------+
    | Name  | UUID                                 | Transport URL                      | Database Connection                             |
    +-------+--------------------------------------+------------------------------------+-------------------------------------------------+
    | cell0 | 00000000-0000-0000-0000-000000000000 | none:/                             | mysql+pymysql://nova:****@controller/nova_cell0 |
    | cell1 | e1657af2-a716-49f7-a3cf-a2eb71af7c80 | rabbit://openstack:****@controller | mysql+pymysql://nova:****@controller/nova       |
    +-------+--------------------------------------+------------------------------------+-------------------------------------------------+
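Reading the list_cells table by eye is fine for a single install. For a scripted install, a small filter can confirm that both cells exist. This is a sketch only: has_cells is a hypothetical helper name, and it assumes the table layout shown in this guide, with the cell name in the first column.

```shell
# has_cells: read `nova-manage cell_v2 list_cells` output on stdin and
# succeed only if both cell0 and cell1 appear in the Name column.
has_cells() {
    awk -F'|' '
        { name = $2; gsub(/^ +| +$/, "", name) }   # trim the first column
        name == "cell0" { c0 = 1 }
        name == "cell1" { c1 = 1 }
        END { exit !(c0 && c1) }
    '
}
```

Typical use would be: nova-manage cell_v2 list_cells | has_cells && echo "cells registered".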

Start the nova services and enable them at boot

    systemctl enable openstack-nova-api.service openstack-nova-consoleauth.service \
      openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service
    systemctl start openstack-nova-api.service openstack-nova-consoleauth.service \
      openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

Nova Compute

Run the following on the compute node. Reference: https://docs.openstack.org/nova/queens/install/compute-install-rdo.html

Install the nova components

    yum install openstack-nova-compute -y
    yum install openstack-utils -y

Configure nova.conf

Quick setup script

    openstack-config --set /etc/nova/nova.conf DEFAULT enabled_apis osapi_compute,metadata
    openstack-config --set /etc/nova/nova.conf DEFAULT transport_url rabbit:
    openstack-config --set /etc/nova/nova.conf api auth_strategy keystone
    openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_uri http:
    openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_url http:
    openstack-config --set /etc/nova/nova.conf keystone_authtoken memcached_servers controller:11211
    openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_type password
    openstack-config --set /etc/nova/nova.conf keystone_authtoken project_domain_name default
    openstack-config --set /etc/nova/nova.conf keystone_authtoken user_domain_name default
    openstack-config --set /etc/nova/nova.conf keystone_authtoken project_name service
    openstack-config --set /etc/nova/nova.conf keystone_authtoken username nova
    openstack-config --set /etc/nova/nova.conf keystone_authtoken password NOVA_PASS
    openstack-config --set /etc/nova/nova.conf DEFAULT my_ip 192.168.7.12
    openstack-config --set /etc/nova/nova.conf DEFAULT use_neutron True
    openstack-config --set /etc/nova/nova.conf DEFAULT firewall_driver nova.virt.firewall.NoopFirewallDriver
    openstack-config --set /etc/nova/nova.conf vnc enabled True
    openstack-config --set /etc/nova/nova.conf vnc vncserver_listen 0.0.0.0
    openstack-config --set /etc/nova/nova.conf vnc vncserver_proxyclient_address \$my_ip
    openstack-config --set /etc/nova/nova.conf vnc novncproxy_base_url http:
    openstack-config --set /etc/nova/nova.conf glance api_servers http:
    openstack-config --set /etc/nova/nova.conf oslo_concurrency lock_path /var/lib/nova/tmp
    openstack-config --set /etc/nova/nova.conf placement os_region_name RegionOne
    openstack-config --set /etc/nova/nova.conf placement project_domain_name Default
    openstack-config --set /etc/nova/nova.conf placement project_name service
    openstack-config --set /etc/nova/nova.conf placement auth_type password
    openstack-config --set /etc/nova/nova.conf placement user_domain_name Default
    openstack-config --set /etc/nova/nova.conf placement auth_url http:
    openstack-config --set /etc/nova/nova.conf placement username placement
    openstack-config --set /etc/nova/nova.conf placement password PLACEMENT_PASS

Manual edit

    cat /etc/nova/nova.conf

    [DEFAULT]
    enabled_apis = osapi_compute,metadata
    transport_url = rabbit://openstack:RABBIT_PASS@controller
    my_ip = 192.168.7.12
    use_neutron = True
    firewall_driver = nova.virt.firewall.NoopFirewallDriver

    [api]
    auth_strategy = keystone

    [keystone_authtoken]
    auth_url = http://controller:5000/v3
    memcached_servers = controller:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = nova
    password = NOVA_PASS

    [vnc]
    enabled = True
    server_listen = 0.0.0.0
    server_proxyclient_address = $my_ip
    novncproxy_base_url = http://controller:6080/vnc_auto.html

    [glance]
    api_servers = http://controller:9292

    [oslo_concurrency]
    lock_path = /var/lib/nova/tmp

    [placement]
    os_region_name = RegionOne
    project_domain_name = Default
    project_name = service
    auth_type = password
    user_domain_name = Default
    auth_url = http://controller:5000/v3
    username = placement
    password = PLACEMENT_PASS
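After editing nova.conf, it can help to read a single value back rather than scan the whole file. The following is a rough sketch, not a full INI parser. ini_get is a hypothetical helper name, and the sketch assumes plain "key = value" lines under "[section]" headers, as in the file above.

```shell
# ini_get FILE SECTION KEY: print the value of KEY inside [SECTION].
# Prints nothing if the section or key is missing.
ini_get() {
    awk -v section="$2" -v key="$3" '
        /^\[/ { in_section = ($0 == "[" section "]"); next }
        in_section && index($0, "=") > 0 {
            k = substr($0, 1, index($0, "=") - 1)
            v = substr($0, index($0, "=") + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", k)   # trim the key
            gsub(/^[ \t]+|[ \t]+$/, "", v)   # trim the value
            if (k == key) { print v; exit }
        }
    ' "$1"
}
```

For example, ini_get /etc/nova/nova.conf DEFAULT my_ip should print the address configured for this node.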

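Check for hardware virtualization support

    egrep -c '(vmx|svm)' /proc/cpuinfo

If the command prints nothing or 0, the CPU does not support hardware acceleration, so libvirt must use QEMU instead of KVM. In that case, change the following setting:

    openstack-config --set /etc/nova/nova.conf libvirt virt_type qemu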
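The check and the decision can be folded into one step. A minimal sketch follows, assuming the same vmx/svm test as above. pick_virt_type is a hypothetical helper name, and the optional file argument exists only so the logic can be tried against a copy of cpuinfo.

```shell
# pick_virt_type [CPUINFO_FILE]: print "kvm" when the CPU flags include
# vmx or svm, otherwise "qemu". Defaults to /proc/cpuinfo.
pick_virt_type() {
    cpuinfo=${1:-/proc/cpuinfo}
    # grep -c exits non-zero on zero matches, so guard with || true.
    count=$(grep -Ec '(vmx|svm)' "$cpuinfo" 2>/dev/null || true)
    if [ "${count:-0}" -gt 0 ]; then
        echo kvm
    else
        echo qemu
    fi
}
```

On the compute node the result could then be applied with: openstack-config --set /etc/nova/nova.conf libvirt virt_type "$(pick_virt_type)".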

Start the nova-compute service and enable it at boot

    systemctl enable libvirtd.service openstack-nova-compute.service
    systemctl start libvirtd.service openstack-nova-compute.service

Nova Controller: register the compute node

Add the compute node to the cell database. Run the following on the controller node.

Confirm that the compute host is in the database

    . admin-openrc

    openstack compute service list --service nova-compute
    +----+--------------+---------+------+---------+-------+----------------------------+
    | ID | Binary       | Host    | Zone | Status  | State | Updated At                 |
    +----+--------------+---------+------+---------+-------+----------------------------+
    |  6 | nova-compute | compute | nova | enabled | up    | 2018-07-13T12:37:37.000000 |
    +----+--------------+---------+------+---------+-------+----------------------------+

Discover compute hosts

    su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova
    /usr/lib/python2.7/site-packages/oslo_db/sqlalchemy/enginefacade.py:332: NotSupportedWarning: Configuration option(s) ['use_tpool'] not supported
      exception.NotSupportedWarning
    Found 2 cell mappings.
    Skipping cell0 since it does not contain hosts.
    Getting computes from cell 'cell1': e1657af2-a716-49f7-a3cf-a2eb71af7c80
    Checking host mapping for compute host 'compute': fe58ddc1-1d65-4f87-9456-bc040dc106b3
    Creating host mapping for compute host 'compute': fe58ddc1-1d65-4f87-9456-bc040dc106b3
    Found 1 unmapped computes in cell: e1657af2-a716-49f7-a3cf-a2eb71af7c80

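When you add new compute nodes, you must run nova-manage cell_v2 discover_hosts on the controller node to register them. Alternatively, set a discovery interval (in seconds) so that the scheduler picks up new hosts periodically:

    openstack-config --set /etc/nova/nova.conf scheduler discover_hosts_in_cells_interval 300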

Verify the Compute service

List the compute services

    . admin-openrc

    openstack compute service list
    +----+------------------+------------+----------+---------+-------+----------------------------+
    | ID | Binary           | Host       | Zone     | Status  | State | Updated At                 |
    +----+------------------+------------+----------+---------+-------+----------------------------+
    |  3 | nova-consoleauth | controller | internal | enabled | up    | 2018-07-15T15:04:09.000000 |
    |  4 | nova-scheduler   | controller | internal | enabled | up    | 2018-07-15T15:04:13.000000 |
    |  5 | nova-conductor   | controller | internal | enabled | up    | 2018-07-15T15:04:08.000000 |
    |  6 | nova-compute     | compute    | nova     | enabled | up    | 2018-07-15T15:04:13.000000 |
    +----+------------------+------------+----------+---------+-------+----------------------------+
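To check the State column without reading the table, the client's value formatter can feed a small filter. This is a sketch under assumptions: services_down is a hypothetical helper name, and it expects three whitespace-separated columns (Binary, Host, State), such as those produced by openstack compute service list -f value -c Binary -c Host -c State.

```shell
# services_down: read "binary host state" lines on stdin and print
# binary@host for every service whose state is not "up".
services_down() {
    awk 'NF >= 3 && $3 != "up" { print $1 "@" $2 }'
}
```

An empty result means every listed service reports up. Any printed entry names a service that needs attention.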

List the API endpoints

    openstack catalog list
    +-----------+-----------+-----------------------------------------+
    | Name      | Type      | Endpoints                               |
    +-----------+-----------+-----------------------------------------+
    | keystone  | identity  | RegionOne                               |
    |           |           |   internal: http://controller:5000/v3/  |
    |           |           | RegionOne                               |
    |           |           |   admin: http://controller:35357/v3/    |
    |           |           | RegionOne                               |
    |           |           |   public: http://controller:5000/v3/    |
    |           |           |                                         |
    | placement | placement | RegionOne                               |
    |           |           |   internal: http://controller:8778      |
    |           |           | RegionOne                               |
    |           |           |   admin: http://controller:8778         |
    |           |           | RegionOne                               |
    |           |           |   public: http://controller:8778        |
    |           |           |                                         |
    | nova      | compute   | RegionOne                               |
    |           |           |   internal: http://controller:8774/v2.1 |
    |           |           | RegionOne                               |
    |           |           |   admin: http://controller:8774/v2.1    |
    |           |           | RegionOne                               |
    |           |           |   public: http://controller:8774/v2.1   |
    |           |           |                                         |
    | glance    | image     | RegionOne                               |
    |           |           |   public: http://controller:9292        |
    |           |           | RegionOne                               |
    |           |           |   internal: http://controller:9292      |
    |           |           | RegionOne                               |
    |           |           |   admin: http://controller:9292         |
    |           |           |                                         |
    +-----------+-----------+-----------------------------------------+

List the images

    openstack image list
    +--------------------------------------+--------+--------+
    | ID                                   | Name   | Status |
    +--------------------------------------+--------+--------+
    | c1489536-e12d-4323-8fb5-14128f4d8d5e | cirros | active |
    +--------------------------------------+--------+--------+

Check that the cells and the placement API are working

    nova-status upgrade check
    /usr/lib/python2.7/site-packages/oslo_db/sqlalchemy/enginefacade.py:332: NotSupportedWarning: Configuration option(s) ['use_tpool'] not supported
      exception.NotSupportedWarning
    Option "os_region_name" from group "placement" is deprecated. Use option "region-name" from group "placement".
    +-------------------------------------------------------------------+
    | Upgrade Check Results                                             |
    +-------------------------------------------------------------------+
    | Check: Cells v2                                                   |
    | Result: Success                                                   |
    | Details: None                                                     |
    +-------------------------------------------------------------------+
    | Check: Placement API                                              |
    | Result: Success                                                   |
    | Details: None                                                     |
    +-------------------------------------------------------------------+
    | Check: Resource Providers                                         |
    | Result: Warning                                                   |
    | Details: There are no compute resource providers in the Placement |
    |   service but there are 1 compute nodes in the deployment.        |
    |   This means no compute nodes are reporting into the              |
    |   Placement service and need to be upgraded and/or fixed.         |
    |   See                                                             |
    |   https://docs.openstack.org/nova/latest/user/placement.html      |
    |   for more details.                                               |
    +-------------------------------------------------------------------+
    | Check: Ironic Flavor Migration                                    |
    | Result: Success                                                   |
    | Details: None                                                     |
    +-------------------------------------------------------------------+
    | Check: API Service Version                                        |
    | Result: Success                                                   |
    | Details: None                                                     |
    +-------------------------------------------------------------------+
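In a long report it is easy to overlook a single Warning among the Success lines, as with the Resource Providers check in the sample output. A minimal sketch of a filter that prints only the checks that did not succeed is shown here. failed_checks is a hypothetical helper name, and the parsing assumes the "Check:" and "Result:" layout of this output.

```shell
# failed_checks: read `nova-status upgrade check` output on stdin and
# print "<check name>: <result>" for every result other than Success.
failed_checks() {
    awk '
        /Check:/ {
            sub(/^.*Check: */, "")       # drop everything up to the name
            sub(/ *\|? *$/, "")          # drop padding and the right border
            check = $0
        }
        /Result:/ {
            sub(/^.*Result: */, "")
            sub(/ *\|? *$/, "")
            if ($0 != "Success") print check ": " $0
        }
    '
}
```

Usage would be: nova-status upgrade check 2>/dev/null | failed_checks.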

Neutron networking service

Run the following on the controller node. Reference: https://docs.openstack.org/neutron/queens/install/install-rdo.html

Create the neutron database and grant privileges

    mysql -u root -p

    CREATE DATABASE neutron;
    GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY 'NEUTRON_DBPASS';
    GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'NEUTRON_DBPASS';

Create the neutron service credentials

Create the neutron user

    . admin-openrc

    openstack user create --domain default --password-prompt neutron
    User Password:          # enter NEUTRON_PASS
    Repeat User Password:
    +---------------------+----------------------------------+
    | Field               | Value                            |
    +---------------------+----------------------------------+
    | domain_id           | default                          |
    | enabled             | True                             |
    | id                  | 3528d4035b0c41979501a72f69934d7c |
    | name                | neutron                          |
    | options             | {}                               |
    | password_expires_at | None                             |
    +---------------------+----------------------------------+

Add the admin role to the neutron user

    openstack role add --project service --user neutron admin

Create the neutron service entity

    openstack service create --name neutron --description "OpenStack Networking" network
    +-------------+----------------------------------+
    | Field       | Value                            |
    +-------------+----------------------------------+
    | description | OpenStack Networking             |
    | enabled     | True                             |
    | id          | 5970a241f2b7427ba369287b55d00575 |
    | name        | neutron                          |
    | type        | network                          |
    +-------------+----------------------------------+

Create the neutron API endpoints

    openstack endpoint create --region RegionOne network public http://controller:9696
    +--------------+----------------------------------+
    | Field        | Value                            |
    +--------------+----------------------------------+
    | enabled      | True                             |
    | id           | 2d48f4da21384a5593a55c38787d3a25 |
    | interface    | public                           |
    | region       | RegionOne                        |
    | region_id    | RegionOne                        |
    | service_id   | 5970a241f2b7427ba369287b55d00575 |
    | service_name | neutron                          |
    | service_type | network                          |
    | url          | http://controller:9696           |
    +--------------+----------------------------------+

    openstack endpoint create --region RegionOne network internal http://controller:9696
    +--------------+----------------------------------+
    | Field        | Value                            |
    +--------------+----------------------------------+
    | enabled      | True                             |
    | id           | 2b41f0ff8a874b738587e52391e2a86f |
    | interface    | internal                         |
    | region       | RegionOne                        |
    | region_id    | RegionOne                        |
    | service_id   | 5970a241f2b7427ba369287b55d00575 |
    | service_name | neutron                          |
    | service_type | network                          |
    | url          | http://controller:9696           |
    +--------------+----------------------------------+

    openstack endpoint create --region RegionOne network admin http://controller:9696
    +--------------+----------------------------------+
    | Field        | Value                            |
    +--------------+----------------------------------+
    | enabled      | True                             |
    | id           | 4ac9b4d66b2d4859a0c7acc045b772bc |
    | interface    | admin                            |
    | region       | RegionOne                        |
    | region_id    | RegionOne                        |
    | service_id   | 5970a241f2b7427ba369287b55d00575 |
    | service_name | neutron                          |
    | service_type | network                          |
    | url          | http://controller:9696           |
    +--------------+----------------------------------+
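The three endpoint commands differ only in the interface name, so they can be written as a loop. A minimal sketch follows. create_endpoints and the OPENSTACK override variable are hypothetical names introduced here, and OPENSTACK=echo prints the commands instead of running them.

```shell
# create_endpoints SERVICE_TYPE URL: create the public, internal and
# admin endpoints for one service in RegionOne.
create_endpoints() {
    service_type=$1
    url=$2
    for iface in public internal admin; do
        ${OPENSTACK:-openstack} endpoint create --region RegionOne \
            "$service_type" "$iface" "$url"
    done
}

# Dry run for the Networking service used in this guide.
OPENSTACK=echo create_endpoints network http://controller:9696
```

This covers only the case where all three interfaces share one URL, as they do in this guide.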

Configure networking options on the controller

Run the following on the controller node. Reference: https://docs.openstack.org/neutron/queens/install/controller-install-rdo.html

    yum install openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge ebtables -y

Configure neutron.conf

Quick setup script

    openstack-config --set /etc/neutron/neutron.conf database connection mysql+pymysql://neutron:NEUTRON_DBPASS@controller/neutron
    openstack-config --set /etc/neutron/neutron.conf DEFAULT core_plugin ml2
    openstack-config --set /etc/neutron/neutron.conf DEFAULT service_plugins
    openstack-config --set /etc/neutron/neutron.conf DEFAULT transport_url rabbit://openstack:RABBIT_PASS@controller
    openstack-config --set /etc/neutron/neutron.conf DEFAULT auth_strategy keystone
    openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_uri http://controller:5000
    openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_url http://controller:35357
    openstack-config --set /etc/neutron/neutron.conf keystone_authtoken memcached_servers controller:11211
    openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_type password
    openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_domain_name default
    openstack-config --set /etc/neutron/neutron.conf keystone_authtoken user_domain_name default
    openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_name service
    openstack-config --set /etc/neutron/neutron.conf keystone_authtoken username neutron
    openstack-config --set /etc/neutron/neutron.conf keystone_authtoken password NEUTRON_PASS
    openstack-config --set /etc/neutron/neutron.conf DEFAULT notify_nova_on_port_status_changes true
    openstack-config --set /etc/neutron/neutron.conf DEFAULT notify_nova_on_port_data_changes true
    openstack-config --set /etc/neutron/neutron.conf nova auth_url http://controller:35357
    openstack-config --set /etc/neutron/neutron.conf nova auth_type password
    openstack-config --set /etc/neutron/neutron.conf nova project_domain_name default
    openstack-config --set /etc/neutron/neutron.conf nova user_domain_name default
    openstack-config --set /etc/neutron/neutron.conf nova region_name RegionOne
    openstack-config --set /etc/neutron/neutron.conf nova project_name service
    openstack-config --set /etc/neutron/neutron.conf nova username nova
    openstack-config --set /etc/neutron/neutron.conf nova password NOVA_PASS
    openstack-config --set /etc/neutron/neutron.conf oslo_concurrency lock_path /var/lib/neutron/tmp

Manual edit

    vim /etc/neutron/neutron.conf

    [database]
    connection = mysql+pymysql:

    [DEFAULT]
    core_plugin = ml2
    service_plugins =
    transport_url = rabbit:
    auth_strategy = keystone
    notify_nova_on_port_status_changes = true
    notify_nova_on_port_data_changes = true

    [keystone_authtoken]
    auth_uri = http:
    auth_url = http:
    memcached_servers = controller:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = neutron
    password = NEUTRON_PASS

    [nova]
    auth_url = http:
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    region_name = RegionOne
    project_name = service
    username = nova
    password = NOVA_PASS

    [oslo_concurrency]
    lock_path = /var/lib/neutron/tmp

Configure the Modular Layer 2 (ML2) plug-in

Quick setup script

    openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 type_drivers flat,vlan
    openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 tenant_network_types
    openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 mechanism_drivers linuxbridge
    openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 extension_drivers port_security
    openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2_type_flat flat_networks provider
    openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini securitygroup enable_ipset true

Manual edit

    vim /etc/neutron/plugins/ml2/ml2_conf.ini

    [ml2]
    type_drivers = flat,vlan
    tenant_network_types =
    mechanism_drivers = linuxbridge
    extension_drivers = port_security

    [ml2_type_flat]
    flat_networks = provider

    [securitygroup]
    enable_ipset = true

Configure the Linux bridge agent (Provider)

Provider network: https://docs.openstack.org/install-guide/launch-instance-networks-selfservice.html

    openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini linux_bridge physical_interface_mappings provider:eth0
    openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan enable_vxlan false
    openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup enable_security_group true
    openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup firewall_driver neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

Manual edit

    vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

    [linux_bridge]
    physical_interface_mappings = provider:eth0

    [vxlan]
    enable_vxlan = false

    [securitygroup]
    enable_security_group = true
    firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

Make sure the Linux kernel supports network bridge filters by verifying that both of the following sysctl values are set to 1:

    modprobe br_netfilter
    # lsmod | grep br_netfilter
    sysctl -p
    net.bridge.bridge-nf-call-iptables
    net.bridge.bridge-nf-call-ip6tables
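Both values are also exposed as files under /proc/sys/net/bridge once br_netfilter is loaded, so the check can be scripted. The following is a sketch. bridge_filter_ok is a hypothetical helper name, and its optional directory argument exists only so the logic can be exercised outside a real host.

```shell
# bridge_filter_ok [DIR]: succeed only if both bridge netfilter
# switches read 1. DIR defaults to /proc/sys/net/bridge.
bridge_filter_ok() {
    root=${1:-/proc/sys/net/bridge}
    for key in bridge-nf-call-iptables bridge-nf-call-ip6tables; do
        # A missing file (module not loaded) counts as a failure.
        [ "$(cat "$root/$key" 2>/dev/null)" = "1" ] || return 1
    done
}
```

For example: bridge_filter_ok || echo "load br_netfilter and set both values to 1".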

Configure the DHCP agent

Quick setup script

    openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT interface_driver linuxbridge
    openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT dhcp_driver neutron.agent.linux.dhcp.Dnsmasq
    openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT enable_isolated_metadata true

Manual edit

    vim /etc/neutron/dhcp_agent.ini

    [DEFAULT]
    interface_driver = linuxbridge
    dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq
    enable_isolated_metadata = true

Configure the metadata agent

Quick setup script

    openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT nova_metadata_host controller
    openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT metadata_proxy_shared_secret METADATA_SECRET

Manual edit

    vim /etc/neutron/metadata_agent.ini

    [DEFAULT]
    nova_metadata_host = controller
    metadata_proxy_shared_secret = METADATA_SECRET

Configure the Compute service to use the Networking service

Run the following on the controller node. Reference: https://docs.openstack.org/neutron/queens/install/compute-install-rdo.html

Configure nova.conf

    openstack-config --set /etc/nova/nova.conf neutron url http://controller:9696
    openstack-config --set /etc/nova/nova.conf neutron auth_url http://controller:35357
    openstack-config --set /etc/nova/nova.conf neutron auth_type password
    openstack-config --set /etc/nova/nova.conf neutron project_domain_name default
    openstack-config --set /etc/nova/nova.conf neutron user_domain_name default
    openstack-config --set /etc/nova/nova.conf neutron region_name RegionOne
    openstack-config --set /etc/nova/nova.conf neutron project_name service
    openstack-config --set /etc/nova/nova.conf neutron username neutron
    openstack-config --set /etc/nova/nova.conf neutron password NEUTRON_PASS
    openstack-config --set /etc/nova/nova.conf neutron service_metadata_proxy true
    openstack-config --set /etc/nova/nova.conf neutron metadata_proxy_shared_secret METADATA_SECRET

Manual edit

    vim /etc/nova/nova.conf

    [neutron]
    url = http:
    auth_url = http:
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    region_name = RegionOne
    project_name = service
    username = neutron
    password = NEUTRON_PASS
    service_metadata_proxy = true
    metadata_proxy_shared_secret = METADATA_SECRET

Finalize and start the services

Create the plug-in symbolic link

    ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

Populate the database

    su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

Restart the Compute API service

    systemctl restart openstack-nova-api.service

Start the networking services and enable them at boot

    systemctl enable neutron-server.service neutron-linuxbridge-agent.service \
      neutron-dhcp-agent.service neutron-metadata-agent.service
    systemctl start neutron-server.service neutron-linuxbridge-agent.service \
      neutron-dhcp-agent.service neutron-metadata-agent.service

Configure the networking service on the compute node

Run the following on the compute node.

Install neutron

    yum install openstack-neutron-linuxbridge ebtables ipset -y

Configure neutron.conf

Quick setup script

    openstack-config --set /etc/neutron/neutron.conf DEFAULT transport_url rabbit:
    openstack-config --set /etc/neutron/neutron.conf DEFAULT auth_strategy keystone
    openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_uri http:
    openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_url http:
    openstack-config --set /etc/neutron/neutron.conf keystone_authtoken memcached_servers controller:11211
    openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_type password
    openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_domain_name default
    openstack-config --set /etc/neutron/neutron.conf keystone_authtoken user_domain_name default
    openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_name service
    openstack-config --set /etc/neutron/neutron.conf keystone_authtoken username neutron
    openstack-config --set /etc/neutron/neutron.conf keystone_authtoken password NEUTRON_PASS
    openstack-config --set /etc/neutron/neutron.conf oslo_concurrency lock_path /var/lib/neutron/tmp

Manual edit

    vim /etc/neutron/neutron.conf

    [DEFAULT]
    transport_url = rabbit:
    auth_strategy = keystone

    [keystone_authtoken]
    auth_uri = http:
    auth_url = http:
    memcached_servers = controller:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = neutron
    password = NEUTRON_PASS

    [oslo_concurrency]
    lock_path = /var/lib/neutron/tmp

Configure the Linux bridge agent (Provider)

Provider network: https://docs.openstack.org/install-guide/launch-instance-networks-selfservice.html

    openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini linux_bridge physical_interface_mappings provider:eth0
    openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan enable_vxlan false
    openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup enable_security_group true
    openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup firewall_driver neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

Manual edit

    vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

    [linux_bridge]
    physical_interface_mappings = provider:eth0

    [vxlan]
    enable_vxlan = false

    [securitygroup]
    enable_security_group = true
    firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

Configure nova on the compute node to use the Networking service

Quick setup script

    openstack-config --set /etc/nova/nova.conf neutron url http:
    openstack-config --set /etc/nova/nova.conf neutron auth_url http:
    openstack-config --set /etc/nova/nova.conf neutron auth_type password
    openstack-config --set /etc/nova/nova.conf neutron project_domain_name default
    openstack-config --set /etc/nova/nova.conf neutron user_domain_name default
    openstack-config --set /etc/nova/nova.conf neutron region_name RegionOne
    openstack-config --set /etc/nova/nova.conf neutron project_name service
    openstack-config --set /etc/nova/nova.conf neutron username neutron
    openstack-config --set /etc/nova/nova.conf neutron password NEUTRON_PASS

Manual edit

    vim /etc/nova/nova.conf

    [neutron]
    url = http:
    auth_url = http:
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    region_name = RegionOne
    project_name = service
    username = neutron
    password = NEUTRON_PASS

Finalize the installation

Restart the Compute service

    systemctl restart openstack-nova-compute.service

Start the Linux bridge agent and enable it at boot

    systemctl enable neutron-linuxbridge-agent.service
    systemctl start neutron-linuxbridge-agent.service

Horizon dashboard

Run the following on the controller node. Reference: https://docs.openstack.org/horizon/queens/install/

Install

    yum install openstack-dashboard -y

Configure local_settings

    vim /etc/openstack-dashboard/local_settings

    OPENSTACK_HOST = "controller"
    OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST
    OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"
    OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True

    SESSION_ENGINE = 'django.contrib.sessions.backends.cache'
    CACHES = {
        'default': {
            'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',
            'LOCATION': 'controller:11211',
        }
    }

    OPENSTACK_API_VERSIONS = {
        "identity": 3,
        "image": 2,
        "volume": 2,
    }

    OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "Default"
    ALLOWED_HOSTS = ['*']

    OPENSTACK_NEUTRON_NETWORK = {
        'enable_router': False,
        'enable_quotas': False,
        'enable_distributed_router': False,
        'enable_ha_router': False,
        'enable_lb': False,
        'enable_firewall': False,
        'enable_vpn': False,
        'enable_fip_topology_check': False,
    }

    TIME_ZONE = "Asia/Shanghai"

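Configure the httpd dashboard file

Add the following line to the file if it is not already present:

    vim /etc/httpd/conf.d/openstack-dashboard.conf

    WSGIApplicationGroup %{GLOBAL}

Restart httpd and memcached

    systemctl restart httpd.service memcached.service

Open http://192.168.7.11/dashboard in a browser to access the OpenStack web interface.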

Launch an instance

Download a system image

    wget https://mirrors.aliyun.com/centos/7/isos/x86_64/CentOS-7-x86_64-Minimal-1804.iso

Create the image

    openstack image create "centos7_x64" --file CentOS-7-x86_64-Minimal-1804.iso \
      --disk-format qcow2 --container-format bare --public
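The file downloaded here is an ISO installer, while the upload command declares it as qcow2. To my knowledge, Glance in this release records the declared format without inspecting the file, so a mismatch would only show up later when an instance boots. A rough sketch for checking a file before upload is given here. guess_disk_format is a hypothetical helper name that looks only at two well-known signatures: the "QFI" magic at the start of qcow2 images and the "CD001" marker at byte offset 32769 of ISO 9660 images. Anything else is reported as raw.

```shell
# guess_disk_format FILE: print qcow2, iso, or raw based on magic bytes.
guess_disk_format() {
    file=$1
    # qcow2 images start with the bytes "QFI" followed by 0xfb.
    if [ "$(head -c 3 "$file" 2>/dev/null | tr -d '\0')" = "QFI" ]; then
        echo qcow2
    # ISO 9660 images carry "CD001" at byte offset 32769.
    elif [ "$(dd if="$file" bs=1 skip=32769 count=5 2>/dev/null | tr -d '\0')" = "CD001" ]; then
        echo iso
    else
        echo raw
    fi
}
```

The printed value could then be passed to --disk-format, for example --disk-format "$(guess_disk_format CentOS-7-x86_64-Minimal-1804.iso)".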

Create the network (based on provider networks)

    . admin-openrc

    openstack network create --share --external --provider-physical-network provider --provider-network-type flat provider
    +---------------------------+--------------------------------------+
    | Field                     | Value                                |
    +---------------------------+--------------------------------------+
    | admin_state_up            | UP                                   |
    | availability_zone_hints   |                                      |
    | availability_zones        |                                      |
    | created_at                | 2018-07-18T09:16:24Z                 |
    | description               |                                      |
    | dns_domain                | None                                 |
    | id                        | a1f3ed6e-f6f3-4b7a-8df8-0be329a913c5 |
    | ipv4_address_scope        | None                                 |
    | ipv6_address_scope        | None                                 |
    | is_default                | None                                 |
    | is_vlan_transparent       | None                                 |
    | mtu                       | 1500                                 |
    | name                      | provider                             |
    | port_security_enabled     | True                                 |
    | project_id                | 397ed95bb8d244fc924fb974235c6661     |
    | provider:network_type     | flat                                 |
    | provider:physical_network | provider                             |
    | provider:segmentation_id  | None                                 |
    | qos_policy_id             | None                                 |
    | revision_number           | 4                                    |
    | router:external           | External                             |
    | segments                  | None                                 |
    | shared                    | True                                 |
    | status                    | ACTIVE                               |
    | subnets                   |                                      |
    | tags                      |                                      |
    | updated_at                | 2018-07-18T09:16:25Z                 |
    +---------------------------+--------------------------------------+

Parameters

--share allows all projects to use the virtual network. --external marks the network as external. --provider-physical-network provider and --provider-network-type flat connect the flat virtual network to the physical network named provider, which the Linux bridge agent configuration maps to eth0.

Create the subnet

    openstack subnet create --network provider --allocation-pool start=192.168.7.30,end=192.168.7.200 \
      --dns-nameserver 192.168.7.2 --gateway 192.168.7.2 --subnet-range 192.168.7.0/24 provider
    +-------------------+--------------------------------------+
    | Field             | Value                                |
    +-------------------+--------------------------------------+
    | allocation_pools  | 192.168.10.30-192.168.10.200         |
    | cidr              | 192.168.10.0/24                      |
    | created_at        | 2018-07-18T09:18:21Z                 |
    | description       |                                      |
    | dns_nameservers   | 223.5.5.5                            |
    | enable_dhcp       | True                                 |
    | gateway_ip        | 192.168.10.1                         |
    | host_routes       |                                      |
    | id                | 8426cc1e-7682-4dfb-b509-4d8a6338cf4b |
    | ip_version        | 4                                    |
    | ipv6_address_mode | None                                 |
    | ipv6_ra_mode      | None                                 |
    | name              | provider                             |
    | network_id        | a1f3ed6e-f6f3-4b7a-8df8-0be329a913c5 |
    | project_id        | 397ed95bb8d244fc924fb974235c6661     |
    | revision_number   | 0                                    |
    | segment_id        | None                                 |
    | service_types     |                                      |
    | subnetpool_id     | None                                 |
    | tags              |                                      |
    | updated_at        | 2018-07-18T09:18:21Z                 |
    +-------------------+--------------------------------------+
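The allocation pool, gateway and DNS addresses all have to make sense relative to the --subnet-range value, and a typo in one octet is easy to miss. The following is a small sketch for checking IPv4 addresses against a CIDR before running the command. ip_to_int and in_cidr are hypothetical helper names, and the sketch assumes well-formed dotted-quad input.

```shell
# ip_to_int A.B.C.D: print the address as a 32-bit integer.
# The body runs in a subshell so the IFS change stays local.
ip_to_int() (
    IFS=.
    set -- $1
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# in_cidr ADDRESS NETWORK/PREFIX: succeed if ADDRESS lies in the range.
in_cidr() {
    addr=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")
    bits=${2#*/}
    mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    [ $(( addr & mask )) -eq $(( net & mask )) ]
}
```

For the values in the command, in_cidr 192.168.7.30 192.168.7.0/24 succeeds, while an address such as 192.168.10.30 would be rejected for the same range.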

Create a flavor

    openstack flavor create --id 0 --vcpus 1 --ram 64 --disk 1 m1.nano
    +----------------------------+---------+
    | Field                      | Value   |
    +----------------------------+---------+
    | OS-FLV-DISABLED:disabled   | False   |
    | OS-FLV-EXT-DATA:ephemeral  | 0       |
    | disk                       | 1       |
    | id                         | 0       |
    | name                       | m1.nano |
    | os-flavor-access:is_public | True    |
    | properties                 |         |
    | ram                        | 64      |
    | rxtx_factor                | 1.0     |
    | swap                       |         |
    | vcpus                      | 1       |
    +----------------------------+---------+

控制节点生成秘钥对,在启动实例之前,需要将公钥添加到Compute服务 1 2 3 4 . demo-openrc ssh-keygen -q -N "" Enter file in which to save the key (/root/.ssh/id_rsa):

在openstack上创建密钥对1 2 3 4 5 6 7 8 9 openstack keypair create --public-key ~/.ssh/id_rsa.pub mykey +-------------+-------------------------------------------------+ | Field | Value | +-------------+-------------------------------------------------+ | fingerprint | 7a:ee:60:81:1a:62:0f:6a:df:a3:7e:f2:72:a3:7a:dd | | name | mykey | | user_ id | fa5af0071d424540af93d9c919721dc3 |+-------------+ -------------------------------------------------+

List the key pairs

    openstack keypair list

    +-------+-------------------------------------------------+
    | Name  | Fingerprint                                     |
    +-------+-------------------------------------------------+
    | mykey | 7a:ee:60:81:1a:62:0f:6a:df:a3:7e:f2:72:a3:7a:dd |
    +-------+-------------------------------------------------+

Add security group rules

Allow ICMP (ping)

    openstack security group rule create --proto icmp default

Allow SSH

    openstack security group rule create --proto tcp --dst-port 22 default

Verification

List networks and subnets

    neutron net-list
    neutron subnet-list

List flavors

    openstack flavor list

    +----+-----------+-------+------+-----------+-------+-----------+
    | ID | Name      | RAM   | Disk | Ephemeral | VCPUs | Is Public |
    +----+-----------+-------+------+-----------+-------+-----------+
    | 0  | m1.nano   | 64    | 1    | 0         | 1     | True      |
    | 1  | m1.tiny   | 512   | 1    | 0         | 1     | True      |
    | 2  | m1.small  | 2048  | 20   | 0         | 1     | True      |
    | 3  | m1.medium | 4096  | 40   | 0         | 2     | True      |
    | 4  | m1.large  | 8192  | 80   | 0         | 4     | True      |
    | 5  | m1.xlarge | 16384 | 160  | 0         | 8     | True      |
    +----+-----------+-------+------+-----------+-------+-----------+

List images

    openstack image list

    +--------------------------------------+-----------------+--------+
    | ID                                   | Name            | Status |
    +--------------------------------------+-----------------+--------+
    | a824235a-7a9f-4a73-afe1-c5bd5b06898c | CentOS-7-x86_64 | active |
    | c1489536-e12d-4323-8fb5-14128f4d8d5e | cirros          | active |
    +--------------------------------------+-----------------+--------+

List networks

    openstack network list

    +--------------------------------------+----------+--------------------------------------+
    | ID                                   | Name     | Subnets                              |
    +--------------------------------------+----------+--------------------------------------+
    | a1f3ed6e-f6f3-4b7a-8df8-0be329a913c5 | provider | 8426cc1e-7682-4dfb-b509-4d8a6338cf4b |
    +--------------------------------------+----------+--------------------------------------+

List security groups

    openstack security group list

    +--------------------------------------+---------+------------------------+----------------------------------+
    | ID                                   | Name    | Description            | Project                          |
    +--------------------------------------+---------+------------------------+----------------------------------+
    | f666c3dc-4183-4acc-a32b-ed49f82536b5 | default | Default security group | 397ed95bb8d244fc924fb974235c6661 |
    +--------------------------------------+---------+------------------------+----------------------------------+

Create the virtual machine

    openstack server create --flavor m1.nano --image cirros \
      --nic net-id=a1f3ed6e-f6f3-4b7a-8df8-0be329a913c5 --security-group default \
      --key-name mykey provider-instance

    +-------------------------------------+-----------------------------------------------+
    | Field                               | Value                                         |
    +-------------------------------------+-----------------------------------------------+
    | OS-DCF:diskConfig                   | MANUAL                                        |
    | OS-EXT-AZ:availability_zone         |                                               |
    | OS-EXT-SRV-ATTR:host                | None                                          |
    | OS-EXT-SRV-ATTR:hypervisor_hostname | None                                          |
    | OS-EXT-SRV-ATTR:instance_name       |                                               |
    | OS-EXT-STS:power_state              | NOSTATE                                       |
    | OS-EXT-STS:task_state               | scheduling                                    |
    | OS-EXT-STS:vm_state                 | building                                      |
    | OS-SRV-USG:launched_at              | None                                          |
    | OS-SRV-USG:terminated_at            | None                                          |
    | accessIPv4                          |                                               |
    | accessIPv6                          |                                               |
    | addresses                           |                                               |
    | adminPass                           | cAWzm9n2qcxR                                  |
    | config_drive                        |                                               |
    | created                             | 2018-07-18T09:55:51Z                          |
    | flavor                              | m1.nano (0)                                   |
    | hostId                              |                                               |
    | id                                  | 49561d99-69a4-40ea-902a-f6bdd7cf1aae          |
    | image                               | cirros (c1489536-e12d-4323-8fb5-14128f4d8d5e) |
    | key_name                            | mykey                                         |
    | name                                | provider-instance                             |
    | progress                            | 0                                             |
    | project_id                          | 397ed95bb8d244fc924fb974235c6661              |
    | properties                          |                                               |
    | security_groups                     | name='f666c3dc-4183-4acc-a32b-ed49f82536b5'   |
    | status                              | BUILD                                         |
    | updated                             | 2018-07-18T09:55:51Z                          |
    | user_id                             | fa5af0071d424540af93d9c919721dc3              |
    | volumes_attached                    |                                               |
    +-------------------------------------+-----------------------------------------------+

Check the instance status

    openstack server list

    +--------------------------------------+-------------------+--------+------------------------+--------+---------+
    | ID                                   | Name              | Status | Networks               | Image  | Flavor  |
    +--------------------------------------+-------------------+--------+------------------------+--------+---------+
    | 49561d99-69a4-40ea-902a-f6bdd7cf1aae | provider-instance | BUILD  | provider=192.168.7.33  | cirros | m1.nano |
    +--------------------------------------+-------------------+--------+------------------------+--------+---------+
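The instance is still in BUILD in the listing above, so the check usually has to be repeated until the status settles. One way to avoid re-running it by hand is a small polling loop, sketched here. `wait_for_active` and `get_server_status` are hypothetical names introduced for this example; the only OpenStack call is `openstack server show ... -f value -c status`, and the retry count and delay defaults are arbitrary assumptions.

```shell
# Hypothetical helper: print the status of one server.
get_server_status() {
    openstack server show "$1" -f value -c status
}

# Hypothetical helper: poll until the server is ACTIVE.
#   $1 = server name or ID, $2 = max attempts (default 30),
#   $3 = seconds between attempts (default 5)
# Returns 0 on ACTIVE, 1 on ERROR, 2 if attempts run out.
wait_for_active() {
    wfa_tries=${2:-30}
    wfa_delay=${3:-5}
    wfa_i=0
    while [ "$wfa_i" -lt "$wfa_tries" ]; do
        case $(get_server_status "$1") in
            ACTIVE) return 0 ;;
            ERROR)  return 1 ;;
        esac
        sleep "$wfa_delay"
        wfa_i=$((wfa_i + 1))
    done
    return 2
}

# Usage:
# wait_for_active provider-instance && openstack server list
```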

Get the VNC console URL

    openstack console url show 49561d99-69a4-40ea-902a-f6bdd7cf1aae

Cinder storage

Run the following steps on the cinder node. Reference: https://docs.openstack.org/cinder/queens/install/index-rdo.html

Install LVM

    yum install lvm2 device-mapper-persistent-data -y

Start LVM and enable it at boot

    systemctl enable lvm2-lvmetad.service
    systemctl start lvm2-lvmetad.service

Create the LVM physical volume

    pvcreate /dev/sdb
    Physical volume "/dev/sdb" successfully created.

Create the LVM volume group cinder-volumes

    vgcreate cinder-volumes /dev/sdb
    Volume group "cinder-volumes" successfully created

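Only instances can access Block Storage volumes. However, the underlying operating system manages the devices associated with the volumes. By default, the LVM volume scanning tool scans the /dev directory for block storage devices that contain volumes. If projects use LVM on their volumes, the scanning tool detects these volumes and attempts to cache them, which can cause a variety of problems for both the underlying operating system and the project volumes. You must reconfigure LVM to scan only the devices that contain the cinder-volumes volume group. Edit the /etc/lvm/lvm.conf file and complete the following step.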

In the devices section, add a filter that accepts the /dev/sdb device and rejects all other devices

    vim /etc/lvm/lvm.conf

    devices {
    ...
    filter = [ "a/sdb/", "r/.*/" ]
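Each filter entry is a regular expression prefixed with `a` (accept) or `r` (reject), and the list ends with a catch-all reject. If the node's operating system disk also uses LVM, that device needs an accept entry too, otherwise the system volumes are hidden from LVM. The sketch below builds the filter line from a list of device names; `lvm_filter` is a hypothetical helper introduced here, and the result still has to be placed in /etc/lvm/lvm.conf by hand.

```shell
# Hypothetical helper: print an lvm.conf filter line that accepts
# the given devices and rejects everything else.
lvm_filter() (
    entries=""
    for dev in "$@"; do
        entries="${entries}\"a/${dev}/\", "
    done
    printf 'filter = [ %s"r/.*/" ]\n' "$entries"
)

# Usage:
# lvm_filter sdb        # storage disk only, as in this guide
# lvm_filter sda sdb    # OS disk on LVM plus the storage disk
```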

Install cinder

    yum install openstack-cinder targetcli python-keystone -y

Configure cinder.conf

Quick setup script: a series of 20 openstack-config commands whose arguments are missing here. The manual settings below cover the same file.

    openstack-config ...

Manual editing

    vim /etc/cinder/cinder.conf

    [database]
    connection = mysql+pymysql://cinder:CINDER_DBPASS@controller/cinder

    [DEFAULT]
    transport_url = rabbit://openstack:RABBIT_PASS@controller
    auth_strategy = keystone
    my_ip = 192.168.7.13
    enabled_backends = lvm
    glance_api_servers = http://controller:9292

    [keystone_authtoken]
    auth_uri = http://controller:5000
    auth_url = http://controller:35357
    memcached_servers = controller:11211
    auth_type = password
    project_domain_id = default
    user_domain_id = default
    project_name = service
    username = cinder
    password = CINDER_PASS

    [lvm]
    volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver
    volume_group = cinder-volumes
    iscsi_protocol = iscsi
    iscsi_helper = lioadm

    [oslo_concurrency]
    lock_path = /var/lib/cinder/tmp
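The same settings can also be applied non-interactively with `openstack-config --set FILE SECTION KEY VALUE` (from the openstack-utils package). The sketch below is not the author's original quick script; it is one possible reconstruction that turns "section key value" lines into the corresponding commands so they can be reviewed before being run. `emit_config_cmds` and `CINDER_CONF` are names introduced for this example.

```shell
# Target file; assumed to be the cinder.conf edited above.
CINDER_CONF=/etc/cinder/cinder.conf

# Hypothetical helper: read "section key value" lines on stdin and
# print one openstack-config command per line. Nothing is executed.
emit_config_cmds() {
    while read -r section key value; do
        # Skip blank lines and comment lines
        case $section in ''|'#'*) continue ;; esac
        printf "openstack-config --set %s %s %s '%s'\n" \
            "$CINDER_CONF" "$section" "$key" "$value"
    done
}

# Usage: print the commands, review them, then pipe to sh.
# emit_config_cmds <<'EOF'
# DEFAULT auth_strategy keystone
# DEFAULT enabled_backends lvm
# lvm volume_group cinder-volumes
# lvm iscsi_helper lioadm
# oslo_concurrency lock_path /var/lib/cinder/tmp
# EOF
```

Printing the commands instead of running them directly keeps the step reviewable, which matters because the placeholder passwords (CINDER_DBPASS, CINDER_PASS, RABBIT_PASS) have to be replaced first.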

Start cinder and enable it at boot

    systemctl enable openstack-cinder-volume.service target.service
    systemctl start openstack-cinder-volume.service target.service

Troubleshooting

    [root@controller ~]# su -s /bin/sh -c "keystone-manage db_sync" keystone
    Traceback (most recent call last):
      File "/usr/bin/keystone-manage", line 6, in <module>
        from keystone.cmd.manage import main
      ...
        from oslo_messaging import server as msg_server
      File "/usr/lib/python2.7/site-packages/oslo_messaging/server.py", line 35, in <module>
        from oslo_service import service
      File "/usr/lib/python2.7/site-packages/oslo_service/__init__.py", line 15, in <module>
        import eventlet.patcher
    ImportError: No module named eventlet.patcher

    ImportError: cannot import name DependencyWarning

Fix: reinstall eventlet and requests.

    pip uninstall eventlet
    pip install eventlet
    pip uninstall requests
    pip install requests

    MessageDeliveryFailure: Unable to connect to AMQP server on controller:5672 after None tries: Connection.open: (530) NOT_ALLOWED - access to vhost '/' refused for user 'openstack'
    2018-07-13 18:56:27.161 8998 ERROR oslo.messaging._drivers.impl_rabbit [req-2060b838-a821-449e-a0eb-9054317d61b0 - - - - -] Unable to connect to AMQP server on controller:5672 after None tries: (0, 0): (403) ACCESS_REFUSED - Login was refused using authentication mechanism AMQPLAIN. For details see the broker logfile.: AccessRefused: (0, 0): (403) ACCESS_REFUSED - Login was refused using authentication mechanism AMQPLAIN. For details see the broker logfile.

Fix: recreate the RabbitMQ user and grant it permissions.

    ##yum remove rabbitmq-server
    ##yum install rabbitmq-server
    rabbitmqctl add_user openstack RABBIT_PASS
    rabbitmqctl set_permissions openstack ".*" ".*" ".*"

systemctl restart openstack-nova-compute fails to start the service (compute node):

    2018-07-13 19:25:13.457 9924 WARNING nova.conductor.api [req-e4842974-bdd9-482a-bc4d-945399ed2be4 - - - - -] Timed out waiting for nova-conductor. Is it running? Or did this service start before nova-conductor? Reattempting establishment of nova-conductor connection...: MessagingTimeout: Timed out waiting for a rep

Fix: on the controller node, check the nova configuration file, confirm that the nova services started normally, and review all nova logs.

    systemctl status openstack-nova-api openstack-nova-consoleauth \
      openstack-nova-scheduler openstack-nova-conductor openstack-nova-novncproxy

    openstack compute service list --service nova-compute
    The server is currently unavailable. Please try again at a later time.<br /><br />
    (HTTP 503) (Request-ID: req-c8597707-5788-4e68-9fc0-f49764ada304)

Fix: check the [keystone_authtoken] settings in the configuration file for mistakes, especially the password.

    cat /etc/nova/nova.conf
    [keystone_authtoken]
    password = NOVA_PASS

    systemctl restart openstack-nova-api.service openstack-nova-consoleauth.service \
      openstack-nova-scheduler.service openstack-nova-conductor.service \
      openstack-nova-novncproxy.service
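Scrolling through the whole of nova.conf to find one value is error-prone, because `password` can appear in several sections. A minimal sketch of pulling a single key out of a named section with awk follows; `ini_get` is a hypothetical helper introduced here, and it assumes simple `key = value` lines without line continuations.

```shell
# Hypothetical helper: print the value of KEY in [SECTION] of an
# INI-style file.  Usage: ini_get FILE SECTION KEY
ini_get() {
    awk -v section="[$2]" -v key="$3" '
        # A section header switches the "inside target section" flag
        /^\[/ { in_section = ($0 == section); next }
        in_section {
            eq = index($0, "=")
            if (eq == 0) next
            k = substr($0, 1, eq - 1)
            v = substr($0, eq + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", k)   # trim the key
            gsub(/^[ \t]+|[ \t]+$/, "", v)   # trim the value
            if (k == key) { print v; exit }
        }
    ' "$1"
}

# Usage:
# ini_get /etc/nova/nova.conf keystone_authtoken password
```

The printed value can then be compared with the password that was set for the nova user in Keystone.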

    Error: Invalid service catalog: true

Translation given in the source: "Error: invalid service catalog: image".

Fix:

    openstack catalog: true

    Multiple service matches found for 'identity', use an ID to be more specific.

Fix: delete the duplicate service entry.

    #openstack endpoint list            # list the endpoints
    #openstack endpoint delete 'id'     # delete an API endpoint by ID
    openstack service list              # list the services
    openstack service delete 'id'       # delete the duplicate service by ID
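This error means the same service type was registered more than once. A minimal sketch for spotting such duplicates follows: it reads "ID Type" pairs, for example from `openstack service list -f value -c ID -c Type`, and prints every type that has more than one ID. `duplicate_service_types` is a hypothetical helper name introduced for this example.

```shell
# Hypothetical helper: read "ID Type" lines on stdin and print each
# service type that appears more than once, followed by its IDs.
duplicate_service_types() {
    awk '
        { count[$2]++; ids[$2] = ids[$2] " " $1 }
        END {
            for (t in count)
                if (count[t] > 1) print t ":" ids[t]
        }
    '
}

# Usage:
# openstack service list -f value -c ID -c Type | duplicate_service_types
```

Which of the listed IDs to delete is a judgment call; the one without registered endpoints is usually the stray entry, but check with `openstack endpoint list` before deleting anything.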
